Translating the nuance of human movement into actionable data is a defining challenge in computer vision. It is about more than tracking: the goal is to capture form, intent, and interaction in a way that machines can interpret. For developers building applications on top of this technology, being able to access it at scale is critical. This is where Fraime by Saiwa comes in, offering accessible AI services.

This article breaks down the core techniques, models, and real-world applications of Pose Detection, providing a clear roadmap for harnessing this transformative technology effectively.

Overview of Pose Detection Techniques

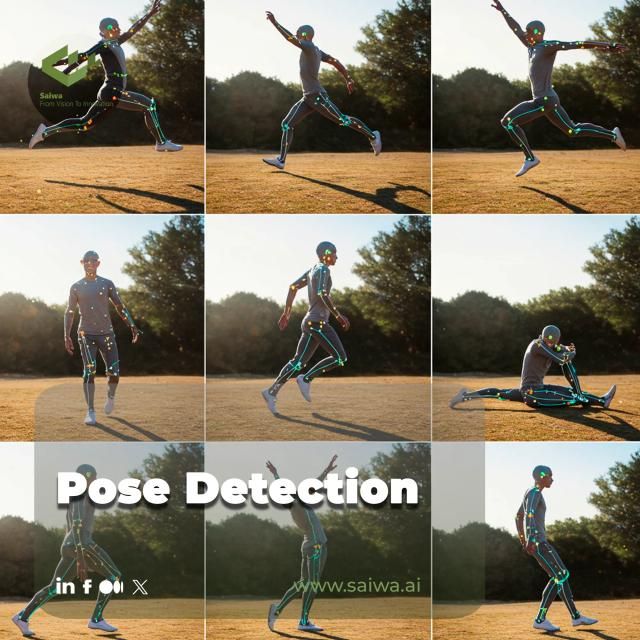

At its core, pose detection works by identifying the coordinates of key body joints (such as shoulders, elbows, and knees) in an image or video. These points are then connected to form a skeletal model, a process often referred to as skeleton detection. Approaches generally fall into two categories: 2D and 3D.
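
To make the keypoint-to-skeleton step concrete, here is a minimal sketch in plain Python. The joint names, the edge list, the confidence threshold, and the sample coordinates are all assumptions chosen for illustration; real models define their own keypoint sets and score ranges.

```python
from typing import Dict, List, Tuple

# (x, y, confidence) in normalized image coordinates. Values below are made up.
Keypoint = Tuple[float, float, float]
Segment = Tuple[Tuple[float, float], Tuple[float, float]]

# Hypothetical subset of joints and the "bones" that connect them.
SKELETON_EDGES: List[Tuple[str, str]] = [
    ("left_shoulder", "left_elbow"),
    ("left_elbow", "left_wrist"),
    ("left_shoulder", "left_hip"),
    ("left_hip", "left_knee"),
    ("left_knee", "left_ankle"),
]


def build_skeleton(keypoints: Dict[str, Keypoint],
                   min_confidence: float = 0.3) -> List[Segment]:
    """Return line segments for edges whose two endpoints are both confident."""
    segments: List[Segment] = []
    for start, end in SKELETON_EDGES:
        if start not in keypoints or end not in keypoints:
            continue  # joint missing from the detection result
        sx, sy, s_conf = keypoints[start]
        ex, ey, e_conf = keypoints[end]
        if s_conf >= min_confidence and e_conf >= min_confidence:
            segments.append(((sx, sy), (ex, ey)))
    return segments


keypoints = {
    "left_shoulder": (0.42, 0.30, 0.95),
    "left_elbow": (0.40, 0.45, 0.91),
    "left_wrist": (0.39, 0.58, 0.12),  # low score, e.g. an occluded wrist
    "left_hip": (0.44, 0.60, 0.88),
    "left_knee": (0.45, 0.78, 0.80),
    "left_ankle": (0.46, 0.95, 0.75),
}
segments = build_skeleton(keypoints)
print(f"{len(segments)} of {len(SKELETON_EDGES)} bones can be drawn")
```

Dropping edges with a low-confidence endpoint keeps the drawn skeleton from jumping to spurious positions when a joint is occluded.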

2D methods map these keypoints onto a two-dimensional plane, which is ideal for many interactive applications. In contrast, 3D techniques add a depth coordinate, providing a much richer spatial understanding of the human body, essential for complex biomechanical analysis and immersive virtual reality experiences.
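
A small worked example shows why the depth coordinate matters. The sketch below computes the angle at the elbow from the same three joints, once using only (x, y) and once using (x, y, z). The coordinates are invented for illustration, and `joint_angle` is a hypothetical helper rather than part of any particular library.

```python
import math
from typing import Sequence


def joint_angle(a: Sequence[float], b: Sequence[float], c: Sequence[float]) -> float:
    """Angle in degrees at point b between segments b->a and b->c (2D or 3D)."""
    ba = [p - q for p, q in zip(a, b)]
    bc = [p - q for p, q in zip(c, b)]
    dot = sum(p * q for p, q in zip(ba, bc))
    norm = math.sqrt(sum(p * p for p in ba)) * math.sqrt(sum(p * p for p in bc))
    if norm == 0:
        raise ValueError("joint_angle needs three distinct points")
    # Clamp to guard against floating-point drift outside [-1, 1].
    cosine = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cosine))


# Made-up coordinates: the forearm is bent toward the camera (along z).
shoulder = (0.0, 0.0, 0.0)
elbow = (0.0, 1.0, 0.0)
wrist = (0.0, 2.0, 1.0)

angle_3d = joint_angle(shoulder, elbow, wrist)
angle_2d = joint_angle(shoulder[:2], elbow[:2], wrist[:2])

print(f"3D elbow angle: {angle_3d:.1f} degrees")
print(f"2D elbow angle: {angle_2d:.1f} degrees")
```

In this example the 2D projection reports a fully straight arm, while the 3D coordinates reveal a bend of roughly 135 degrees, which is the kind of difference that matters for biomechanical analysis.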

Common Models and Toolkits

The rapid evolution of this field is largely thanks to a range of powerful models and toolkits, each designed with specific goals in mind. To get a better sense of the landscape, it helps to look at the tools that developers are actively using today. Below, we'll examine some of the most influential frameworks and lightweight SDKs available.

Notable frameworks:

MoveNet: A fast Google model that detects 17 body keypoints, available in a "Lightning" variant for maximum speed and a "Thunder" variant for higher accuracy, which makes it well suited to real-time applications.

BlazePose: Also from Google and available through MediaPipe, this model tracks 33 keypoints with estimated depth, which suits detailed fitness and wellness analytics.

OpenPose: Developed at Carnegie Mellon, this pioneering bottom-up framework is known for multi-person pose estimation, identifying up to 135 keypoints per person (body, feet, hands, and face) in a single frame.
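
Whichever framework is used, the raw output usually needs a small amount of post-processing before it can be drawn or analyzed. As an illustration, the sketch below assumes a single-person output laid out the way MoveNet's documentation describes it: a `[1, 1, 17, 3]` array of normalized `(y, x, score)` triples in COCO keypoint order. The input here is fabricated nested-list data rather than a real model result, and `to_pixels` and the 0.3 threshold are hypothetical choices.

```python
from typing import Dict, List, Tuple

# COCO-style keypoint order assumed for the 17-keypoint output.
KEYPOINT_NAMES = [
    "nose", "left_eye", "right_eye", "left_ear", "right_ear",
    "left_shoulder", "right_shoulder", "left_elbow", "right_elbow",
    "left_wrist", "right_wrist", "left_hip", "right_hip",
    "left_knee", "right_knee", "left_ankle", "right_ankle",
]


def to_pixels(output: List, width: int, height: int,
              min_score: float = 0.3) -> Dict[str, Tuple[int, int]]:
    """Convert normalized (y, x, score) triples into pixel (x, y) positions."""
    person = output[0][0]  # strip the batch and person dimensions
    pixels: Dict[str, Tuple[int, int]] = {}
    for name, (y, x, score) in zip(KEYPOINT_NAMES, person):
        if score >= min_score:
            pixels[name] = (round(x * width), round(y * height))
    return pixels


# Fabricated stand-in for a model result: every joint at the image center.
fake_output = [[[[0.5, 0.5, 0.9] for _ in range(17)]]]
fake_output[0][0][0] = [0.25, 0.5, 0.9]   # nose, higher in the frame
fake_output[0][0][16] = [0.9, 0.6, 0.1]   # right ankle, low confidence

pixels = to_pixels(fake_output, width=640, height=480)
print(pixels["nose"], len(pixels))
```

Note the axis order: the triples are read as `(y, x, score)`, so the helper swaps them when producing pixel `(x, y)` pairs.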

Lightweight SDKs:

MediaPipe: An open-source, cross-platform framework ideal for deploying models like BlazePose efficiently on mobile (Android/iOS) and web applications.

DeepLobe: A flexible toolkit architected for building and deploying custom deep learning models with relative ease.

Tizen & .NET: Specialized SDKs that extend pose analysis capabilities, allowing integration across a wider ecosystem of devices and enterprise environments.

How Fraime Enables Human Pose Detection

While open-source models provide a strong foundation, implementing them at scale requires significant expertise in managing complex computational infrastructure. Fraime streamlines this entire process.

As an AI-as-a-Service platform, Fraime offers a suite of optimized, scalable computer vision services, including pose detection, via a REST API. Developers can also integrate capabilities such as face detection and object analysis without building or maintaining the underlying systems.

This allows teams to focus on creating value for their users, whether they are developing an interactive fitness app or an advanced security system.

Use Cases and Implementation Scenarios

The practical applications of this technology are already reshaping user experiences across various domains. The ability to precisely analyze and respond to human movement unlocks functionalities that were previously out of reach. Here are a few key examples where this technology is making a significant impact:

Fitness and motion feedback: Virtual coaches analyze a user's form during exercises like yoga or weightlifting, providing real-time corrective feedback to enhance performance and prevent injuries.

Rehabilitation and health monitoring: Physical therapists can remotely track a patient’s recovery progress by analyzing their completion of prescribed exercises, ensuring adherence and proper technique.

Human-computer interaction (HCI) and immersive experiences: This technology enables gesture-based controls for everything from interactive displays to AR/VR environments, creating a more natural and intuitive way to engage with digital content.
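
As a sketch of how the fitness scenario above might turn keypoints into feedback, the example below counts squat repetitions from a per-frame sequence of knee angles. It assumes the angles have already been computed from hip, knee, and ankle keypoints. The two thresholds and the sample sequence are illustrative assumptions, not clinically validated values.

```python
from typing import Iterable


def count_reps(knee_angles: Iterable[float],
               down_below: float = 100.0,
               up_above: float = 160.0) -> int:
    """Count squat reps using two thresholds (hysteresis) on the knee angle.

    A rep is counted when the angle first drops below `down_below`
    and later rises above `up_above`.
    """
    reps = 0
    is_down = False
    for angle in knee_angles:
        if not is_down and angle < down_below:
            is_down = True       # reached the bottom of the squat
        elif is_down and angle > up_above:
            is_down = False      # back to standing: one full rep
            reps += 1
    return reps


# Made-up per-frame knee angles in degrees: two full squats, then a shallow dip.
angles = [175, 150, 110, 90, 85, 120, 165, 170,
          140, 95, 130, 168, 172, 150, 120, 155, 170]
reps = count_reps(angles)
print(f"Counted {reps} reps")
```

Using two separate thresholds instead of one avoids double counting when the angle jitters around a single cutoff, and it lets the shallow final dip (which never goes below 100 degrees) be ignored or flagged as incomplete.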

Conclusion

Pose detection has evolved rapidly from simple 2D keypoint tracking to sophisticated 3D models that can analyze multiple people in real time. Understanding the strengths, limitations, and best practices of different models enables developers and businesses to leverage pose detection to create smarter, more responsive, and impactful solutions.