Real-time human pose estimation presents a persistent challenge, forcing a trade-off between the precision of heavy computational models and the speed required for practical applications. Achieving low-latency, high-fidelity results on accessible hardware is the critical barrier.

At Saiwa, our Fraime platform is engineered to dismantle this barrier, offering developers powerful and optimized computer vision services. This article technically dissects the fusion of Google’s MediaPipe with classical optimization algorithms, revealing how this hybrid approach delivers a robust, efficient, and highly scalable solution for complex pose analysis tasks.

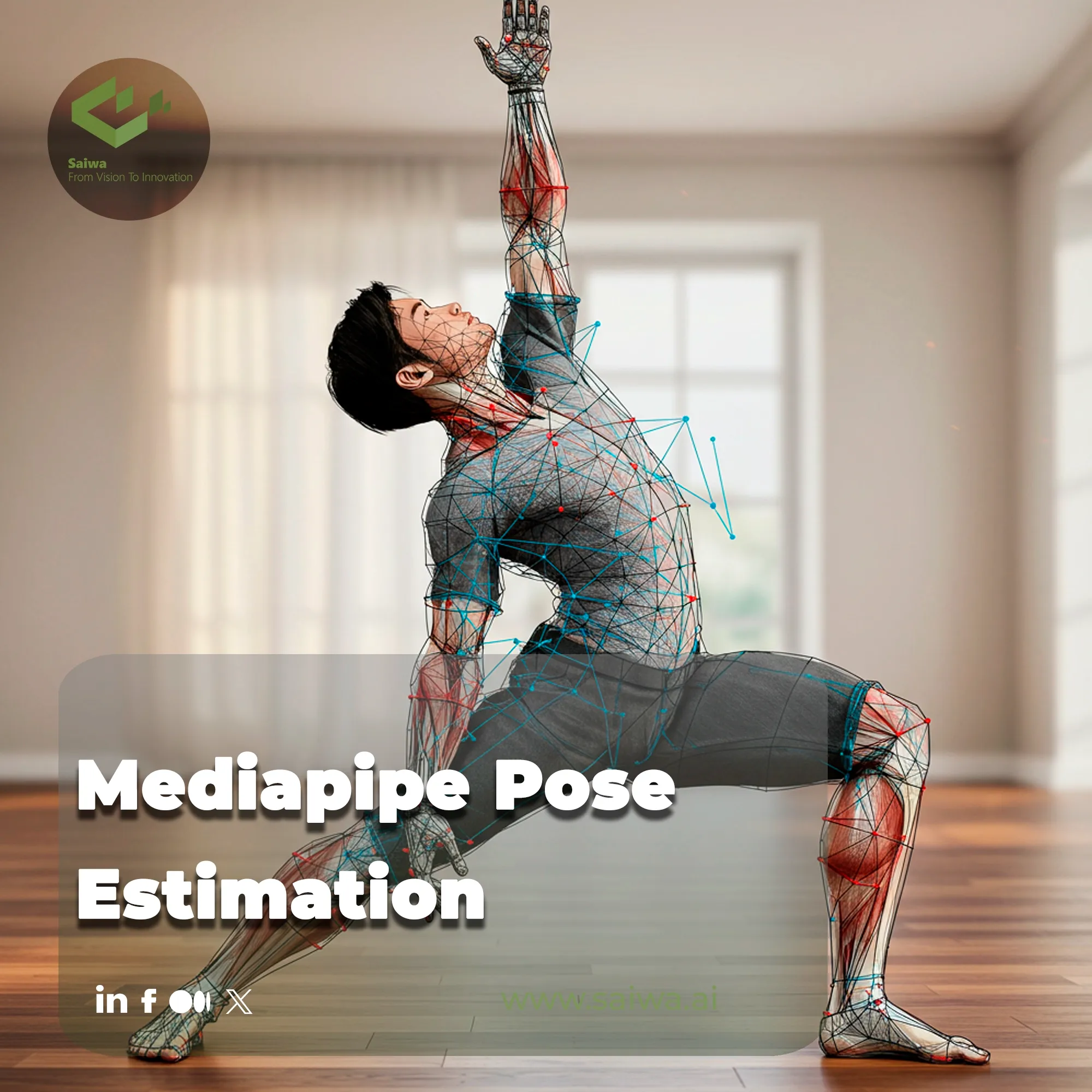

Technologies Powering Advanced Pose Estimation

To fully appreciate the subsequent optimizations, it's essential to first understand the foundational technology that makes this high-speed analysis possible. The system begins with a highly efficient pipeline for identifying human figures in visual data, which is detailed in the components that follow.

MediaPipe: Google’s Real-Time Vision Pipeline

MediaPipe is not a single model but a framework for building processing pipelines. For pose estimation, it uses a two-step detector-tracker architecture: a detector first locates a person's region of interest (ROI) in the frame, and a dedicated tracker model then infers the landmarks within that ROI. On video, the ROI for the next frame can be derived from the current landmarks, so the detector does not have to run on every frame, which drastically reduces computational overhead.
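The control flow behind that saving can be sketched in a few lines. This is a simplified illustration, not MediaPipe's actual code: `run_pipeline`, `detect_roi`, `track_landmarks`, and the `min_confidence` threshold are hypothetical names, and the two models are passed in as plain callables.

```python
def run_pipeline(frames, detect_roi, track_landmarks, min_confidence=0.5):
    """Run the (expensive) detector only when no ROI carries over from the previous frame.

    detect_roi(frame) -> roi or None            (hypothetical detector callable)
    track_landmarks(frame, roi) -> (landmarks, confidence, next_roi)  (hypothetical tracker)
    """
    roi = None
    outputs = []
    detector_calls = 0
    for frame in frames:
        if roi is None:
            roi = detect_roi(frame)
            detector_calls += 1
        if roi is None:
            outputs.append(None)  # no person found in this frame
            continue
        landmarks, confidence, next_roi = track_landmarks(frame, roi)
        if confidence >= min_confidence:
            outputs.append(landmarks)
            roi = next_roi  # reuse for the next frame, skipping detection
        else:
            outputs.append(None)
            roi = None  # tracking lost: fall back to the detector on the next frame
    return outputs, detector_calls
```

With a tracker that stays confident, the detector runs once at the start and again only after tracking is lost, which is where the per-frame cost drops.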

BlazePose Architecture

The core of MediaPipe's performance is its BlazePose model. This lightweight model, initially designed for mobile devices, excels at generating 33 distinct body landmarks from a simple RGB input, providing the raw 2D coordinate data needed for more advanced analysis.
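As a rough illustration of working with that output, the sketch below converts normalized landmark coordinates (MediaPipe reports x and y relative to the image width and height) into pixels and looks up a few joints by name. The index values follow MediaPipe's published 33-point pose topology; `to_pixels` and `pick` are illustrative helpers, not part of the MediaPipe API.

```python
# Subset of the 33-point pose topology (indices as published for MediaPipe Pose).
LANDMARK_INDEX = {
    "nose": 0,
    "left_shoulder": 11,
    "right_shoulder": 12,
    "left_hip": 23,
    "right_hip": 24,
    "left_knee": 25,
    "right_knee": 26,
    "left_ankle": 27,
    "right_ankle": 28,
}


def to_pixels(landmarks, width, height):
    """Convert normalized (x, y) pairs in [0, 1] to pixel coordinates."""
    return [(x * width, y * height) for x, y in landmarks]


def pick(landmarks_px, name):
    """Return the pixel coordinates of a named joint from a full 33-point list."""
    return landmarks_px[LANDMARK_INDEX[name]]


# Usage with placeholder data: 33 landmarks all at the image centre.
placeholder = [(0.5, 0.5)] * 33
left_knee_px = pick(to_pixels(placeholder, 640, 480), "left_knee")
```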

From 2D to 3D: Hybrid Optimization Approach

While MediaPipe provides fast 2D landmarks, translating them into accurate 3D poses demands a solution that overcomes the inherent limitations of deep learning alone. The answer lies in a hybrid method that combines machine learning output with a classic, model-based optimization algorithm, explored through the following key stages.

Why 2D Alone Isn’t Enough

Relying solely on 2D data, or on deep learning alone for the 3D conversion, runs into depth ambiguity: different 3D poses can produce identical 2D images. Purely learned models also struggle to reconstruct rare or complex poses, such as falls, that are poorly represented in training datasets.
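Depth ambiguity is easy to reproduce with a pinhole camera model. In the minimal sketch below, two different 3D wrist positions lie on the same ray from the camera and therefore land on the same 2D point. The `project` helper, the focal length, and the coordinates are illustrative assumptions.

```python
def project(point, focal=1.0):
    """Pinhole projection of a 3D point (x, y, z), z > 0, onto the image plane."""
    x, y, z = point
    return (focal * x / z, focal * y / z)


near_wrist = (0.2, 0.1, 2.0)
far_wrist = (0.4, 0.2, 4.0)  # same viewing ray, twice as far from the camera

# Both positions project to the same image point, so the 2D data alone
# cannot tell them apart.
same_image_point = project(near_wrist) == project(far_wrist)
```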

Humanoid Modeling and the Role of uDEAS

Instead of a neural network, this hybrid approach uses a classical optimization algorithm called uDEAS (univariate Dynamic Encoding Algorithm for Searches). The algorithm fits a sophisticated 3D humanoid model, which includes multi-directional lumbar joints for realistic torso movement, to the 2D points from MediaPipe.

Pose Estimation Pipeline Overview

The process begins with MediaPipe extracting the 2D skeleton. Then, the uDEAS algorithm iteratively adjusts the 3D humanoid model's joint angles to find the configuration whose 2D projection best matches the MediaPipe output.
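The fitting idea can be illustrated with a toy problem. The sketch below is not uDEAS: it swaps in a plain coordinate-wise search that perturbs one joint angle at a time and halves the step size when nothing improves. The model is also reduced to a planar two-segment leg, and the segment lengths and all function names are assumptions made for illustration.

```python
import math

SEGMENT_LENGTHS = (0.45, 0.42)  # hypothetical thigh and shin lengths in metres


def forward_2d(angles):
    """2D knee and ankle positions of a two-segment leg rooted at the hip (origin)."""
    points, x, y, total = [], 0.0, 0.0, 0.0
    for length, angle in zip(SEGMENT_LENGTHS, angles):
        total += angle  # each angle is relative to the previous segment
        x += length * math.sin(total)
        y += length * math.cos(total)
        points.append((x, y))
    return points


def reprojection_error(angles, observed):
    """Sum of squared distances between model joints and observed 2D landmarks."""
    return sum((px - ox) ** 2 + (py - oy) ** 2
               for (px, py), (ox, oy) in zip(forward_2d(angles), observed))


def fit_angles(observed, start=(0.0, 0.0), step=0.5, min_step=1e-6):
    """Coordinate-wise search: try +/- step on each angle, keep any improvement."""
    angles = list(start)
    best = reprojection_error(angles, observed)
    while step > min_step:
        improved = False
        for i in range(len(angles)):
            for delta in (step, -step):
                trial = list(angles)
                trial[i] += delta
                err = reprojection_error(trial, observed)
                if err < best:
                    angles, best, improved = trial, err, True
        if not improved:
            step /= 2.0  # refine the search once no single move helps
    return angles, best


# Usage: synthesize "observed" landmarks from known angles, then recover them.
observed = forward_2d((0.4, -0.7))
fitted, error = fit_angles(observed)
```

A real system would search over many more joint angles and project a full 3D model through a camera, but the loop has the same shape: propose a pose, project it, compare against the 2D landmarks, and keep what reduces the mismatch.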

System Workflow

To ensure physical plausibility, the system adds a unique loss function that penalizes poses where the body's center of mass is not stably supported by the feet, effectively filtering out unnatural or impossible body positions.
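The exact form of that loss is not given here, so the following is only a hypothetical one-dimensional simplification of the idea: the penalty is zero while the centre of mass sits over the span between the feet and grows quadratically once it moves outside. The function names, the `margin` parameter, and the per-joint masses are all assumptions.

```python
def center_of_mass_x(joint_xs, masses):
    """Mass-weighted average of horizontal joint positions."""
    total = sum(masses)
    return sum(x * m for x, m in zip(joint_xs, masses)) / total


def support_penalty(com_x, left_foot_x, right_foot_x, margin=0.0):
    """Zero when the centre of mass is over the support span, quadratic outside it."""
    low = min(left_foot_x, right_foot_x) - margin
    high = max(left_foot_x, right_foot_x) + margin
    if com_x < low:
        return (low - com_x) ** 2
    if com_x > high:
        return (com_x - high) ** 2
    return 0.0


# Usage: a balanced pose costs nothing, a pose leaning far past the feet is penalized.
balanced = support_penalty(0.0, -0.1, 0.1)
leaning = support_penalty(0.3, -0.1, 0.1)
```

Added to the reprojection error, a term like this steers the search away from poses that match the 2D landmarks but could not be held by a real body.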

Real-Time Performance

This entire optimization process is executed in just 0.033 seconds per frame on a single-board computer, which corresponds to roughly 30 frames per second, achieving real-time performance without requiring a dedicated GPU.

Applications and Experiments

Daily Activities and Fall Detection

This method has been validated for monitoring daily human activities, showing particular strength in reliably reconstructing asymmetrical and complex poses like falls, a critical function for elderly care systems.

Fitness and Exercise Monitoring

With MediaPipe pose estimation, you can track your body posture and joint angles during workouts. An application built on top of it can count repetitions of exercises such as squats and push-ups, recognize held positions such as yoga poses, and provide real-time feedback to help you improve your form and prevent injuries.
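A minimal sketch of repetition counting, assuming the hip, knee, and ankle landmarks are already available as 2D points: compute the knee angle per frame, then count a repetition each time the angle drops below a "down" threshold and rises back above an "up" threshold. The thresholds and function names are illustrative assumptions and would need tuning per exercise.

```python
import math


def joint_angle(a, b, c):
    """Angle at b in degrees, formed by the points a-b-c (each an (x, y) pair)."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    norm = math.hypot(*v1) * math.hypot(*v2)
    if norm == 0.0:
        raise ValueError("joint_angle needs three distinct points")
    dot = v1[0] * v2[0] + v1[1] * v2[1]
    cosine = max(-1.0, min(1.0, dot / norm))  # clamp against rounding error
    return math.degrees(math.acos(cosine))


def count_reps(knee_angles, down_below=100.0, up_above=160.0):
    """Count down-then-up cycles; the two thresholds prevent jitter from double counting."""
    reps, is_down = 0, False
    for angle in knee_angles:
        if not is_down and angle < down_below:
            is_down = True
        elif is_down and angle > up_above:
            is_down = False
            reps += 1
    return reps


# Usage: knee angles over time for two squats.
squats = count_reps([170, 120, 90, 130, 165, 170, 95, 168])
```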

Sports Performance Analysis

By analyzing athletes' movements in detail, including joint velocities and angles, coaches can optimize technique, reduce injury risk, and enhance performance.
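Joint velocities can be approximated from per-frame joint angles with a finite difference. The sketch below assumes a fixed frame rate; the `angular_velocity` name and the sample values are illustrative.

```python
def angular_velocity(angles_deg, fps):
    """Approximate angular velocity (degrees per second) between consecutive frames."""
    return [(later - earlier) * fps
            for earlier, later in zip(angles_deg, angles_deg[1:])]


# Usage: elbow angle sampled at 30 frames per second.
velocities = angular_velocity([0.0, 3.0, 9.0], fps=30.0)
```

Because differencing amplifies landmark jitter, a practical pipeline would usually smooth the angle series before differentiating it.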

Visualizing and Validating 3D Reconstruction

The output is a full 3D skeleton that can be visualized and verified, confirming the accurate spatial reconstruction of the person's pose from the original 2D image.

Healthcare and Rehabilitation

Beyond fall detection, pose estimation aids physiotherapy and patient monitoring, allowing medical professionals to objectively track mobility improvements and gait abnormalities over time.

This same principle of applying specialized computer vision, such as skeleton detection, to monitor dynamic biological systems extends beyond humans, offering potential insights for animal studies, robotics, and agricultural monitoring.

Advantages of Combining MediaPipe with Optimization Techniques

This fusion of a lightweight neural network with a classic optimization algorithm yields distinct advantages over purely deep learning-based methods, creating a more practical and robust system.

Lower Hardware Requirements

The system's efficiency eliminates the need for expensive GPUs, making advanced pose analysis accessible on low-power, edge-computing devices.

Better Generalization for Rare or Complex Poses

By relying on a physical model, the system can accurately reconstruct poses it has never "seen," overcoming a major limitation of models that depend exclusively on training data.

Greater Transparency and Control

The deterministic nature of the uDEAS algorithm makes the process less of a "black box," offering greater control and predictability compared to end-to-end deep learning pipelines.

Conclusion: A Practical, Scalable Pose Estimation System

The synergy between MediaPipe’s efficient 2D detection and a constrained 3D optimization model creates a system that is computationally lean, robustly accurate, and highly scalable. This intelligent blend of technologies makes it possible to build and deploy high-performance pose analysis solutions on accessible hardware, effectively bridging the gap between academic research and practical, real-world applications.

Note: Some visuals on this blog post were generated using AI tools.