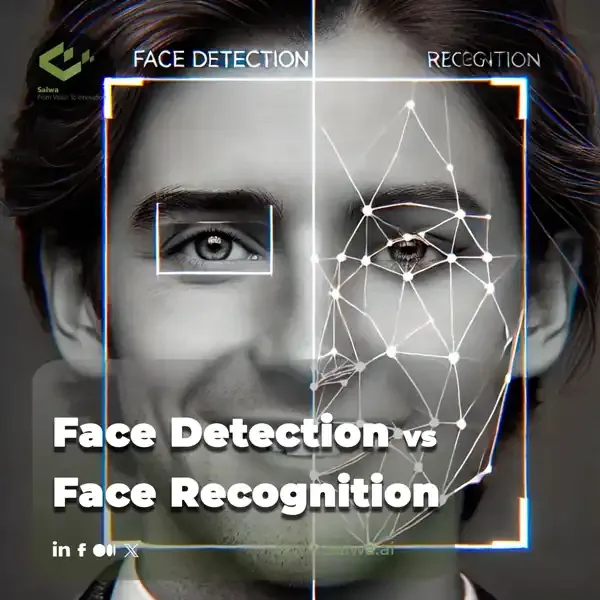

In the rapidly evolving field of computer vision, face detection and face recognition have emerged as two of the most pivotal technologies. Although the terms are often used interchangeably, they serve different purposes and employ distinct methodologies. Face detection identifies the presence and location of faces within images or videos. Face recognition goes a step further: it not only finds faces but also verifies or identifies individuals based on the unique characteristics of their facial features.

At Saiwa, we understand the critical role these technologies play in today's digital landscape. As an AI company that leverages a service-oriented platform to deliver advanced artificial intelligence (AI) and machine learning (ML) solutions, we offer both Face Detection and Face Recognition services designed to meet diverse needs across industries.

Our Face Detection Service at Saiwa is powered by two cutting-edge methods:

Dlib: an open-source, cross-platform library known for its speed and low computational cost.

Multi-task Cascaded Convolutional Networks (MTCNN): a deep-learning-based method chosen for its higher accuracy.

For organizations looking to harness the power of face recognition, Saiwa provides a generic Face Recognition Interface built on our robust face detection algorithms. You can explore two distinct approaches within our platform:

Recognition using Dlib face detector

Recognition using MTCNN face detector

Understanding the differences between these technologies is crucial, as each has its unique challenges, applications, and significance in shaping the future of artificial intelligence. From security systems to social media, and from personalized marketing to advanced human-computer interactions, the impact of face detection and recognition is far-reaching and continues to grow.

This article examines the fundamental principles of face detection and recognition, exploring the challenges they face, the algorithms that power them, and the various ways they are transforming industries. Whether you're a tech enthusiast, a developer, or someone interested in the ethical implications of AI, this comprehensive guide will provide you with a deeper understanding of these essential technologies.

What is Face Detection?

Face detection, as its name suggests, focuses on identifying the presence and location of human faces within digital images or video streams. It serves as a fundamental building block for numerous computer vision applications, acting as a precursor to more complex tasks like facial recognition, emotion analysis, and age estimation.

Concept and Objectives

The primary objective of face detection is to answer the question: "Are there any faces in this image or video, and if so, where are they?" Achieving this involves analyzing the visual data, identifying patterns and features characteristic of human faces, and delineating their boundaries within the image or video frame.

Key Challenges

Despite its seemingly straightforward objective, face detection must overcome several challenges:

Pose and Lighting Variation: Faces appear in different orientations (frontal, profile, tilted), and their appearance also changes under varying lighting conditions.

Occlusion: Faces can be partially or fully obscured by objects like sunglasses, scarves, or other faces, making their detection more challenging.

Image Quality: Factors like low resolution, noise, and blurriness can degrade image quality, hindering the accuracy of face detection algorithms.

Computational Complexity: Processing high-resolution images or video streams in real-time requires significant computational resources, posing challenges for resource-constrained devices.

Common Algorithms and Techniques

Over the years, researchers have developed a plethora of algorithms and techniques for face detection, each with its strengths and limitations. Some of the most common approaches include:

Viola-Jones Algorithm

One of the earliest and most widely used methods, the Viola-Jones algorithm leverages Haar-like features, integral images, and cascade classifiers for efficient face detection.

Histogram of Oriented Gradients (HOG)

HOG features capture the distribution of edge orientations within an image region, providing a robust representation of facial features.

Convolutional Neural Networks (CNNs)

Deep learning-based CNNs have emerged as powerful tools for face detection, achieving state-of-the-art accuracy by learning hierarchical feature representations from vast datasets.

Evaluation Metrics for Face Detection

The performance of face detection algorithms is typically evaluated using metrics such as:

Precision: Measures the proportion of correctly detected faces out of all detected faces.

Recall: Measures the proportion of correctly detected faces out of all actual faces present.

F1-Score: Provides a harmonic mean of precision and recall, offering a balanced measure of overall accuracy.

Frames per Second (FPS): Measures the speed of face detection, which is particularly relevant for real-time applications.
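To make the precision, recall, and F1 definitions concrete, here is a minimal Python sketch that scores a detector's output against ground-truth boxes. It assumes boxes are given as (x1, y1, x2, y2) and counts a detection as correct when its intersection over union (IoU) with a not-yet-matched ground-truth face is at least 0.5; that threshold and the greedy, confidence-ordered matching are common conventions rather than the only option. The function names are hypothetical.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def detection_metrics(detections, ground_truth, iou_threshold=0.5):
    """Return (precision, recall, f1).

    detections: list of (box, confidence); ground_truth: list of boxes.
    Each ground-truth face can be matched by at most one detection.
    """
    matched = set()
    true_positives = 0
    # Most confident detections get first pick of the ground-truth faces.
    for box, _conf in sorted(detections, key=lambda d: d[1], reverse=True):
        best_idx, best_iou = None, iou_threshold
        for idx, gt in enumerate(ground_truth):
            overlap = iou(box, gt)
            if idx not in matched and overlap >= best_iou:
                best_idx, best_iou = idx, overlap
        if best_idx is not None:
            matched.add(best_idx)
            true_positives += 1
    precision = true_positives / len(detections) if detections else 0.0
    recall = true_positives / len(ground_truth) if ground_truth else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Example: one good detection, one false alarm, and one missed face.
ground_truth = [(0, 0, 10, 10), (20, 20, 30, 30)]
detections = [((1, 1, 11, 11), 0.9), ((50, 50, 60, 60), 0.8)]
precision, recall, f1 = detection_metrics(detections, ground_truth)
```

Here precision, recall, and F1 all come out to 0.5: one of two detections is correct, and one of two faces is found.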

What is Face Recognition?

Face recognition goes a step further than face detection, aiming to identify individuals based on their unique facial features. It involves comparing a given face image or video frame against a database of known faces to determine if a match exists.

Concept and Objectives

The primary objective of face recognition is to answer the question: "Whose face is this?" Answering it involves extracting distinctive facial features from the input image, generating a facial template or representation, and comparing it against templates stored in a database to find the closest match.

Key Challenges

Face recognition must overcome even greater challenges than face detection, including:

Intra-class Variation: Facial appearance can vary significantly for the same individual due to factors like aging, facial expressions, hairstyles, and accessories.

Inter-class Similarity: Different individuals can share similar facial features, making it challenging to distinguish between them, especially in large databases.

Spoofing Attacks: Face recognition systems are vulnerable to spoofing attacks using photographs, videos, or even 3D masks to impersonate legitimate users.

Common Algorithms and Techniques

Similar to face detection, a wide range of algorithms and techniques have been developed for face recognition, including:

Eigenfaces

This approach uses Principal Component Analysis (PCA) to extract the most significant features (eigenfaces) from a set of face images, representing faces as linear combinations of these eigenfaces.

Fisherfaces

Building upon Eigenfaces, Fisherfaces employ Linear Discriminant Analysis (LDA) to find features that maximize inter-class separation while minimizing intra-class variation.

Local Binary Patterns Histograms (LBPH)

LBPH describes local texture patterns around each pixel, providing a representation of facial features that is robust to lighting changes.

Deep Convolutional Neural Networks (CNNs)

Deep CNNs have revolutionized face recognition, achieving unprecedented accuracy by learning highly discriminative facial features from massive datasets.

Evaluation Metrics for Face Recognition

The performance of face recognition systems is typically evaluated using metrics like:

Accuracy: Measures the proportion of correctly identified faces out of all tested faces.

Verification Rate: Measures the proportion of genuine matches correctly accepted by the system.

False Acceptance Rate (FAR): Measures the proportion of impostor comparisons incorrectly accepted by the system.

False Rejection Rate (FRR): Measures the proportion of genuine matches incorrectly rejected by the system.

Equal Error Rate (EER): The error rate at the decision threshold where FAR and FRR are equal. It provides a single number for comparing systems; a lower EER indicates a more accurate system.
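The following sketch shows how FAR, FRR, and an approximate EER can be computed from similarity scores of genuine pairs (same person) and impostor pairs (different people). It assumes that higher scores mean "more similar" and that a pair is accepted when its score reaches the threshold; the function names and sample scores are illustrative. The verification rate listed above is simply 1 - FRR at the chosen threshold.

```python
def far_frr(genuine, impostor, threshold):
    """FAR and FRR when a pair is accepted if its similarity score >= threshold."""
    far = sum(s >= threshold for s in impostor) / len(impostor)
    frr = sum(s < threshold for s in genuine) / len(genuine)
    return far, frr


def equal_error_rate(genuine, impostor):
    """Approximate the EER by trying every observed score as a threshold.

    Returns (threshold, eer), where eer averages FAR and FRR at the
    threshold where the two rates are closest.
    """
    best = None
    for t in sorted(set(genuine) | set(impostor)):
        far, frr = far_frr(genuine, impostor, t)
        if best is None or abs(far - frr) < best[0]:
            best = (abs(far - frr), t, (far + frr) / 2)
    return best[1], best[2]


# Example scores (higher = more similar).
genuine = [0.9, 0.8, 0.75, 0.6, 0.4]
impostor = [0.1, 0.2, 0.3, 0.5, 0.65]
threshold, eer = equal_error_rate(genuine, impostor)
verification_rate = 1 - far_frr(genuine, impostor, threshold)[1]
```

With these scores, FAR and FRR are both 0.2 at a threshold of 0.6, so the EER is 0.2. Real evaluations sweep many thousands of pairs, which makes the estimate far less coarse.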

Comparison of Face Detection and Face Recognition

While both face detection and face recognition fall under the umbrella of computer vision, they differ significantly in their objectives, complexities, and applications.

Primary Objectives and Outputs

| Feature | Face Detection | Face Recognition |
|---|---|---|
| Primary Objective | Locate and delineate faces within an image or video. | Identify individuals based on their facial features. |
| Output | Bounding boxes or other markers indicating the location of faces. | Identity or name of the identified individual, along with a confidence score. |

Computational Complexity

Face recognition is generally more computationally intensive than face detection: a recognition pipeline usually runs face detection first and then adds alignment, feature extraction, and matching against a database.

Data Requirements

Face recognition requires labeled face images: large labeled datasets to train the model, plus enrolled images of the specific people the system must recognize. Face detection, by contrast, can often use a pre-trained model as-is, without any application-specific training data.

Use Cases and Applications

While distinct technologies, both face detection and face recognition find diverse applications across various domains. Face detection, specializing in locating faces within images or videos, proves invaluable for:

Privacy Enhancement: Automatically blurring faces in images or videos to protect individuals' identities.

Social Media Enrichment: Locating faces in uploaded photos so they can be tagged, simplifying photo organization and sharing.

Security Reinforcement: Serving as the first stage of access control systems, which then check whether each detected face belongs to an authorized individual.

Driver Safety: Detecting driver drowsiness in real time, alerting drivers, and potentially preventing accidents.

Automated Attendance: Finding faces in classroom or workplace footage as the first step of automated attendance tracking, eliminating manual roll calls.

Face recognition, on the other hand, focuses on identifying individuals based on their unique facial features, enabling applications such as:

Seamless Device Access: Facilitating biometric authentication for unlocking smartphones, laptops, or other personal devices.

Secure Transactions: Strengthening security in financial transactions by verifying user identities.

Law Enforcement Assistance: Aiding law enforcement agencies in identifying suspects from surveillance footage or image databases.

Targeted Marketing: Enabling personalized advertising and recommendations by identifying demographics and potentially inferring preferences.

Emotionally Aware Systems: Analyzing facial expressions to discern emotions, paving the way for more emotionally intelligent human-computer interactions.

Face Detection Techniques

As mentioned earlier, various techniques have been developed for face detection, each with its strengths and limitations. Here's a closer look at some of the most prominent techniques:

Viola-Jones Algorithm

This pioneering algorithm, introduced in 2001, remains widely used today because of its speed and simplicity. It relies on Haar-like features, simple rectangular features that capture contrasting patterns in an image, such as the difference in summed intensity between adjacent regions.

These features are calculated efficiently using integral images, allowing for rapid image scanning. The algorithm then employs a cascade classifier, a series of simple classifiers trained to reject non-face regions quickly, reducing the computational cost. While effective for frontal face detection, the Viola-Jones algorithm struggles with variations in pose and lighting.
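Below is a minimal pure-Python sketch of the two ideas that make Viola-Jones fast: an integral image, which lets the sum of any rectangle be read with four lookups regardless of its size, and a two-rectangle Haar-like feature built on top of it. The function names and the toy image are hypothetical; a real detector evaluates many such features at many positions and scales inside its cascade.

```python
def integral_image(img):
    """ii[y][x] holds the sum of img over all rows < y and columns < x."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii, x, y, w, h):
    """Sum of the w-by-h rectangle whose top-left pixel is (x, y): four lookups."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


def haar_two_rect(ii, x, y, w, h):
    """Two-rectangle Haar-like feature: left half minus right half of the window."""
    half = w // 2
    return rect_sum(ii, x, y, half, h) - rect_sum(ii, x + half, y, half, h)


# Toy 4x4 patch: bright left half, dark right half (a vertical edge).
patch = [[9, 9, 1, 1] for _ in range(4)]
ii = integral_image(patch)
edge_response = haar_two_rect(ii, 0, 0, 4, 4)  # 72 - 8
```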

Histogram of Oriented Gradients (HOG)

HOG features, introduced in 2005, provide a more robust representation of facial features compared to Haar-like features. The HOG descriptor divides an image region into small cells, calculating the histogram of gradient orientations within each cell.

These histograms are then concatenated to form the HOG feature vector for the entire region. HOG features are less sensitive to variations in illumination and can handle minor pose changes better than Haar-like features.
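The cell-histogram step of HOG can be sketched as follows. This is a simplified version, not the full published method: it computes gradients with central differences, casts nearest-bin votes for unsigned orientations (0 to 180 degrees) weighted by gradient magnitude, and concatenates the per-cell histograms. It omits the block normalization and interpolated voting that full HOG implementations use, and the cell size and bin count are illustrative parameters.

```python
import math


def gradients(image):
    """Per-pixel gradient magnitude and unsigned orientation in degrees [0, 180)."""
    h, w = len(image), len(image[0])
    mag = [[0.0] * w for _ in range(h)]
    ang = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Central differences, clamped at the image border.
            gx = image[y][min(x + 1, w - 1)] - image[y][max(x - 1, 0)]
            gy = image[min(y + 1, h - 1)][x] - image[max(y - 1, 0)][x]
            mag[y][x] = math.hypot(gx, gy)
            ang[y][x] = math.degrees(math.atan2(gy, gx)) % 180.0
    return mag, ang


def hog_cells(image, cell_size=4, bins=9):
    """Concatenate the magnitude-weighted orientation histogram of each cell."""
    mag, ang = gradients(image)
    bin_width = 180.0 / bins
    features = []
    for cy in range(0, len(image) - cell_size + 1, cell_size):
        for cx in range(0, len(image[0]) - cell_size + 1, cell_size):
            hist = [0.0] * bins
            for y in range(cy, cy + cell_size):
                for x in range(cx, cx + cell_size):
                    # Nearest-bin vote (full HOG interpolates between bins).
                    hist[int(ang[y][x] // bin_width) % bins] += mag[y][x]
            features.extend(hist)
    return features


# A 4x4 patch with a vertical edge: all gradient energy lands in the 0-degree bin.
patch = [[0, 0, 10, 10] for _ in range(4)]
descriptor = hog_cells(patch)
```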

Deformable Part Models (DPMs)

DPMs, introduced in 2009, offer a more flexible approach to object detection, including face detection. They model an object as a collection of parts arranged in a deformable configuration. Each part has its own learned filter, and the final detection score combines the responses of a root filter and all part filters with a deformation cost that penalizes parts straying from their expected positions. DPMs can handle larger variations in pose and viewpoint than Viola-Jones and HOG, but they are more computationally expensive.
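To illustrate how a DPM combines part responses with their spatial relationships, here is a simplified scoring sketch. It assumes the filter responses have already been computed, stores each part's responses in a dictionary keyed by (x, y) position, and applies a quadratic penalty on each part's displacement from its expected anchor. The names, search radius, and penalty weights are hypothetical.

```python
def best_part_placement(response, anchor, deform_weights, radius=2):
    """Max over nearby positions of (part response - quadratic deformation cost).

    response: dict mapping (x, y) -> part filter response at that position.
    anchor: the part's expected (x, y) position for the current root placement.
    deform_weights: (wx, wy) penalties on squared horizontal/vertical displacement.
    """
    ax, ay = anchor
    wx, wy = deform_weights
    best = float("-inf")
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            pos = (ax + dx, ay + dy)
            if pos in response:
                best = max(best, response[pos] - (wx * dx * dx + wy * dy * dy))
    return best


def dpm_score(root_response, parts):
    """Root filter response plus the best placement score of every part.

    parts: list of (response_map, anchor, deform_weights) tuples.
    """
    return root_response + sum(best_part_placement(r, a, w) for r, a, w in parts)


# One hypothetical part whose strongest response is one pixel right of its anchor;
# moving there is worth the deformation penalty (2.0 - 0.5 > 0.5).
eye_part = ({(5, 5): 0.5, (6, 5): 2.0}, (5, 5), (0.5, 0.5))
score = dpm_score(1.0, [eye_part])
```

The quadratic penalty is what lets parts shift to follow pose changes while still discouraging implausible layouts.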

Convolutional Neural Networks (CNNs)

CNNs have revolutionized computer vision, including face detection, achieving state-of-the-art accuracy. CNNs consist of multiple layers of convolutional filters that learn hierarchical feature representations from data. These filters automatically learn to extract relevant features from images, from simple edges and corners in early layers to more complex facial features in deeper layers. CNNs for face detection are typically trained on massive datasets of labeled face images, allowing them to generalize well to different poses, lighting conditions, and occlusions.
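A convolutional layer slides small filters across the image. The sketch below uses a hand-set vertical-edge kernel as a stand-in for the kind of filter a CNN's first layer might learn, followed by a ReLU activation. Like most deep learning frameworks, it actually computes cross-correlation (the kernel is not flipped); the kernel values and function names are illustrative assumptions, since a real CNN learns its kernels from data.

```python
def conv2d_valid(image, kernel):
    """'Valid' 2-D cross-correlation with stride 1 and no padding."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    out = [[0.0] * out_w for _ in range(out_h)]
    for y in range(out_h):
        for x in range(out_w):
            acc = 0.0
            for i in range(kh):
                for j in range(kw):
                    acc += image[y + i][x + j] * kernel[i][j]
            out[y][x] = acc
    return out


def relu(feature_map):
    """Keep positive responses and zero out the rest."""
    return [[max(0.0, v) for v in row] for row in feature_map]


# Hand-set kernel that responds to dark-to-bright transitions from left to right.
VERTICAL_EDGE = [[-1, 0, 1], [-1, 0, 1], [-1, 0, 1]]

dark_to_bright = [[0, 0, 0, 10, 10] for _ in range(3)]
bright_to_dark = [[10, 10, 10, 0, 0] for _ in range(3)]
rising = relu(conv2d_valid(dark_to_bright, VERTICAL_EDGE))
falling = relu(conv2d_valid(bright_to_dark, VERTICAL_EDGE))
```

The same filter fires on one edge polarity and is silenced by the ReLU on the other; deeper layers combine many such responses into progressively more face-specific features.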

Face Recognition Techniques

Similar to face detection, face recognition techniques have evolved significantly, from early geometric-based approaches to modern deep learning-based methods. Here's a closer look at some of the most prominent techniques:

Eigenfaces

Eigenfaces, introduced in 1991, represent one of the earliest approaches to face recognition. This method uses Principal Component Analysis (PCA) to reduce the dimensionality of face images while retaining the most important information. PCA finds the principal components, or eigenvectors, of the covariance matrix of a set of face images. These eigenvectors, known as eigenfaces, represent the directions of maximum variance in the data. Each face image can then be represented as a linear combination of these eigenfaces. Face recognition is performed by projecting a new face image onto the eigenspace and comparing its projection coefficients to those of known faces.
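The eigenface pipeline can be sketched in pure Python for tiny, flattened "images". This is an illustration under strong simplifying assumptions: it builds the covariance matrix directly and finds eigenvectors by power iteration with deflation, which is only sensible for very short vectors (real implementations use an SVD), and it matches faces by nearest neighbour in eigenspace. All names and data are hypothetical.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def fit_eigenfaces(faces, k, iterations=500):
    """Return (mean_face, eigenfaces) for a list of equal-length flattened faces."""
    n, d = len(faces), len(faces[0])
    mean = [sum(col) / n for col in zip(*faces)]
    centered = [[p - m for p, m in zip(face, mean)] for face in faces]
    # d x d covariance matrix: only practical for tiny d.
    cov = [[sum(c[i] * c[j] for c in centered) / n for j in range(d)] for i in range(d)]
    eigenfaces = []
    for _ in range(k):
        v = [1.0 + 0.01 * i for i in range(d)]  # arbitrary non-zero starting vector
        for _ in range(iterations):
            w = [dot(row, v) for row in cov]
            norm = math.sqrt(dot(w, w))
            if norm == 0.0:
                break
            v = [x / norm for x in w]
        eigenvalue = dot(v, [dot(row, v) for row in cov])
        if eigenvalue <= 1e-12:
            break  # no variance left to explain
        eigenfaces.append(v)
        # Deflation: subtract this component so the next pass finds the next one.
        cov = [[cov[i][j] - eigenvalue * v[i] * v[j] for j in range(d)] for i in range(d)]
    return mean, eigenfaces


def project(face, mean, eigenfaces):
    """Coefficients of the mean-subtracted face in the eigenface basis."""
    centered = [p - m for p, m in zip(face, mean)]
    return [dot(centered, e) for e in eigenfaces]


def recognize(face, gallery, mean, eigenfaces):
    """Name of the gallery face nearest to `face` in eigenspace."""
    query = project(face, mean, eigenfaces)
    return min(gallery, key=lambda name: math.dist(query, project(gallery[name], mean, eigenfaces)))


# Toy 4-pixel "faces": two images each of two people.
training = [[200, 190, 60, 50], [205, 195, 55, 45], [50, 60, 190, 200], [45, 55, 195, 205]]
mean_face, eigenfaces = fit_eigenfaces(training, k=1)
gallery = {"alice": training[0], "bob": training[2]}
match = recognize([195, 185, 65, 55], gallery, mean_face, eigenfaces)
```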

Fisherfaces

Fisherfaces, introduced in 1997, build upon Eigenfaces by incorporating class information into the dimensionality reduction process. Instead of PCA, Fisherfaces use Linear Discriminant Analysis (LDA) to find the projection directions that maximize inter-class separation while minimizing intra-class variation. This makes Fisherfaces more robust to variations in illumination and facial expressions compared to Eigenfaces.
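The LDA step at the heart of Fisherfaces can be illustrated with the two-class Fisher discriminant, w = S_W^-1 (m_a - m_b), where S_W is the within-class scatter matrix and m_a, m_b are the class means. The sketch below assumes 2-D features so the 2x2 matrix can be inverted in closed form; Fisherfaces proper first reduces dimensionality with PCA and then applies multi-class LDA. In the toy data, the y axis carries most of the overall variance (the direction PCA would favor), but the two people differ only along x, which is the direction LDA recovers.

```python
def fisher_direction(class_a, class_b):
    """Two-class Fisher discriminant for 2-D points: w = Sw^-1 (mean_a - mean_b)."""
    def mean(points):
        n = len(points)
        return (sum(p[0] for p in points) / n, sum(p[1] for p in points) / n)

    ma, mb = mean(class_a), mean(class_b)
    # Within-class scatter matrix [[sxx, sxy], [sxy, syy]], summed over both classes.
    sxx = sxy = syy = 0.0
    for points, m in ((class_a, ma), (class_b, mb)):
        for x, y in points:
            dx, dy = x - m[0], y - m[1]
            sxx += dx * dx
            sxy += dx * dy
            syy += dy * dy
    det = sxx * syy - sxy * sxy
    if det == 0:
        raise ValueError("within-class scatter matrix is singular")
    diff_x, diff_y = ma[0] - mb[0], ma[1] - mb[1]
    # Closed-form inverse of a symmetric 2x2 matrix, applied to the mean difference.
    return ((syy * diff_x - sxy * diff_y) / det,
            (-sxy * diff_x + sxx * diff_y) / det)


# Both classes spread widely along y but differ only along x.
person_a = [(0, -10), (1, 10), (0, 5), (1, -5)]
person_b = [(4, -10), (5, 10), (4, 5), (5, -5)]
w = fisher_direction(person_a, person_b)
proj_a = [x * w[0] + y * w[1] for x, y in person_a]
proj_b = [x * w[0] + y * w[1] for x, y in person_b]
```

After projection onto w, the two classes fall into non-overlapping intervals, even though the raw data varies far more along y.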

Local Binary Patterns Histograms (LBPH)

LBPH, introduced in 2004, is a texture-based approach to face recognition. It describes the local structure of an image by comparing the intensity of each pixel with that of its neighbors. Each neighbor contributes one bit, set according to whether it is brighter or darker than the central pixel, and these bits are concatenated into a binary code for that pixel. The image is divided into regions, the codes within each region are counted in a histogram, and the regional histograms are concatenated into a single feature vector for the face. LBPH is computationally efficient and relatively robust to variations in illumination.
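Here is a minimal sketch of the basic LBP operator and the regional histograms that LBPH concatenates. It assumes the simple 8-neighbour, 256-bin variant with a "greater than or equal to the centre" rule; practical implementations add options such as the neighbourhood radius and the grid size. Function names and test data are hypothetical. Because each bit depends only on whether a neighbour is at least as bright as the centre, the codes are unchanged by any strictly increasing brightness transformation, which is the source of LBP's tolerance to lighting changes.

```python
# Neighbour offsets (dy, dx), clockwise from the top-left; neighbour i sets bit i.
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_code(img, y, x):
    """8-bit LBP code of interior pixel (y, x)."""
    center = img[y][x]
    code = 0
    for bit, (dy, dx) in enumerate(OFFSETS):
        if img[y + dy][x + dx] >= center:
            code |= 1 << bit
    return code


def lbph(img, grid=(2, 2)):
    """Concatenate 256-bin histograms of LBP codes over a grid of regions."""
    h, w = len(img), len(img[0])
    # Border pixels lack a full neighbourhood, so only interior pixels get codes.
    codes = [[lbp_code(img, y, x) for x in range(1, w - 1)] for y in range(1, h - 1)]
    rows, cols = grid
    rh, rw = len(codes) // rows, len(codes[0]) // cols
    features = []
    for r in range(rows):
        for c in range(cols):
            hist = [0] * 256
            for y in range(r * rh, (r + 1) * rh):
                for x in range(c * rw, (c + 1) * rw):
                    hist[codes[y][x]] += 1
            features.extend(hist)
    return features


patch = [[10, 20, 30], [40, 50, 60], [70, 80, 90]]
brighter = [[2 * v + 5 for v in row] for row in patch]  # strictly increasing change
face = [[(7 * x + 3 * y) % 11 for x in range(6)] for y in range(6)]
descriptor = lbph(face)
```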

Deep Convolutional Neural Networks (CNNs)

Similar to their impact on face detection, deep CNNs have revolutionized face recognition, achieving unprecedented accuracy. CNNs for face recognition are typically trained on massive datasets of labeled face images using techniques like triplet loss or contrastive loss. These loss functions encourage the network to learn embeddings that cluster images of the same identity while pushing apart images of different identities. The learned embeddings capture highly discriminative facial features, making CNN-based face recognition systems highly accurate and robust to variations in pose, illumination, and expression.
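The sketch below shows the triplet loss on embedding vectors and how learned embeddings are then used to identify a face against a small gallery by cosine similarity. The margin of 0.2, the 0.6 acceptance threshold, the toy 3-D embeddings, and the function names are assumptions for illustration; real embeddings come from a trained network and typically have many more dimensions.

```python
import math


def triplet_loss(anchor, positive, negative, margin=0.2):
    """max(0, ||a - p||^2 - ||a - n||^2 + margin) for one triplet of embeddings."""
    d_pos = math.dist(anchor, positive) ** 2
    d_neg = math.dist(anchor, negative) ** 2
    return max(0.0, d_pos - d_neg + margin)


def cosine_similarity(a, b):
    return sum(x * y for x, y in zip(a, b)) / (math.hypot(*a) * math.hypot(*b))


def identify(query, gallery, threshold=0.6):
    """Return (name, score) of the closest identity, or (None, score) below threshold."""
    name, score = max(
        ((n, cosine_similarity(query, emb)) for n, emb in gallery.items()),
        key=lambda item: item[1],
    )
    return (name, score) if score >= threshold else (None, score)


# The loss is zero once the negative is farther than the positive by the margin.
easy = triplet_loss([0.0, 0.0], [0.1, 0.0], [1.0, 0.0])
hard = triplet_loss([0.0, 0.0], [1.0, 0.0], [0.1, 0.0])

gallery = {"alice": [1.0, 0.0, 0.0], "bob": [0.0, 1.0, 0.0]}
known = identify([0.9, 0.1, 0.0], gallery)
unknown = identify([0.0, 0.0, 1.0], gallery)
```

The threshold in `identify` is what lets the system answer "unknown person" instead of always returning the nearest gallery identity.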

Data Preprocessing

Both face detection and face recognition systems benefit significantly from data preprocessing, which involves applying various techniques to enhance the quality of input images and improve the performance of subsequent algorithms.

For Face Detection

Illumination Normalization: Variations in lighting conditions can significantly affect the appearance of faces, making it challenging for face detection algorithms to perform consistently. Illumination normalization techniques aim to reduce these variations by adjusting the brightness and contrast of images, making them more uniform.

Noise Reduction: Images captured in low-light conditions or with high ISO settings often contain noise, which can degrade the performance of face detection algorithms. Noise reduction techniques, such as Gaussian filtering or median filtering, can be applied to reduce noise while preserving important image details.

Histogram Equalization: Histogram equalization is a technique that adjusts the contrast of an image by distributing pixel intensities more evenly across the histogram. This can improve the visibility of facial features, making it easier for face detection algorithms to identify them.
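Histogram equalization and median filtering can be sketched in plain Python for an 8-bit grayscale image stored as a list of rows. These are minimal illustrations with hypothetical function names; libraries such as OpenCV provide optimized equivalents (for example `cv2.equalizeHist` and `cv2.medianBlur`). The median filter here copies border pixels unchanged, which is only one of several possible border policies.

```python
def equalize_histogram(img, levels=256):
    """Global histogram equalization for an 8-bit grayscale image (list of rows)."""
    hist = [0] * levels
    for row in img:
        for v in row:
            hist[v] += 1
    # Cumulative distribution of pixel intensities.
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running)
    total = cdf[-1]
    cdf_min = next(c for c in cdf if c > 0)
    if total == cdf_min:  # single-intensity image: nothing to spread out
        return [row[:] for row in img]
    # Lookup table that spreads the occupied intensities over the full range.
    lut = [max(0, round((c - cdf_min) * (levels - 1) / (total - cdf_min))) for c in cdf]
    return [[lut[v] for v in row] for row in img]


def median_filter3(img):
    """3x3 median filter; border pixels are copied unchanged."""
    h, w = len(img), len(img[0])
    out = [row[:] for row in img]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            window = sorted(img[y + dy][x + dx] for dy in (-1, 0, 1) for dx in (-1, 0, 1))
            out[y][x] = window[4]  # middle of the 9 values
    return out


low_contrast = [[50, 50], [60, 70]]
equalized = equalize_histogram(low_contrast)

noisy = [[10, 10, 10], [10, 255, 10], [10, 10, 10]]  # one "salt" pixel
denoised = median_filter3(noisy)
```

Equalization stretches the narrow 50 to 70 range to span 0 to 255, and the median filter removes the isolated noisy pixel without averaging it into its neighbours.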

For Face Recognition

Face Alignment: Face alignment, also known as face registration, involves aligning faces to a standardized pose and scale. This ensures that facial features are in consistent positions across different images, improving the accuracy of feature extraction and matching.

Data Augmentation: Data augmentation involves artificially increasing the size and diversity of the training dataset by applying various transformations to existing images, such as rotations, translations, and flips. This helps improve the robustness of face recognition models to variations in pose, illumination, and expression.

Feature Normalization: Feature normalization involves scaling extracted facial features to a standard range, ensuring that features contribute equally to the matching process. This can improve the accuracy of face recognition, especially when dealing with features extracted using different methods or from images with varying quality.
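A common way to align a face is to use two detected eye centres: rotate so that the line between the eyes is horizontal, and scale so that the eyes are a fixed distance apart. The sketch below computes that rotation and scale and applies them to points; applying them to the whole image would require an affine warp (OpenCV's `cv2.warpAffine` is one option). It also shows L2 normalization, one common way to put feature vectors on a common scale before matching. The 50-pixel target distance and all function names are assumptions.

```python
import math


def eye_alignment(left_eye, right_eye, target_distance=50.0):
    """Tilt angle (degrees) of the eye line and the scale that sets the eye distance."""
    dx = right_eye[0] - left_eye[0]
    dy = right_eye[1] - left_eye[1]
    angle = math.degrees(math.atan2(dy, dx))
    scale = target_distance / math.hypot(dx, dy)
    return angle, scale


def transform_point(point, center, angle_deg, scale):
    """Rotate `point` by -angle_deg about `center` and scale it, undoing the tilt."""
    theta = math.radians(-angle_deg)
    x, y = point[0] - center[0], point[1] - center[1]
    return (
        center[0] + scale * (x * math.cos(theta) - y * math.sin(theta)),
        center[1] + scale * (x * math.sin(theta) + y * math.cos(theta)),
    )


def l2_normalize(features):
    """Scale a feature vector to unit length (left unchanged if all zeros)."""
    norm = math.sqrt(sum(v * v for v in features))
    return [v / norm for v in features] if norm > 0 else list(features)


left, right = (30.0, 40.0), (70.0, 80.0)  # tilted face: right eye lower in the image
angle, scale = eye_alignment(left, right)
mid = ((left[0] + right[0]) / 2, (left[1] + right[1]) / 2)
aligned_left = transform_point(left, mid, angle, scale)
aligned_right = transform_point(right, mid, angle, scale)
```

After the transform, both eyes share the same row and sit exactly 50 pixels apart, so every aligned face presents its features at consistent positions.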

Machine Learning in Face Detection and Recognition

Machine learning, particularly deep learning, has played a pivotal role in advancing both face detection and face recognition technologies.

Training Data: Machine learning models, especially deep learning models, require vast amounts of labeled data for training. Large-scale datasets have been instrumental here: general-purpose image collections such as ImageNet are widely used for pre-training, while face-specific datasets such as MS-Celeb-1M and VGGFace2 have been used to train highly accurate face recognition models.

Feature Learning: Deep learning models, particularly CNNs, excel at automatically learning hierarchical feature representations from data. This eliminates the need for handcrafted features, which can be time-consuming to design and may not generalize well to different datasets or conditions.

End-to-End Training: Deep learning models can be trained end-to-end, meaning that the entire pipeline, from raw image input to final output (face detection or recognition), is learned jointly. This allows for optimizing the entire system for the specific task, leading to improved performance.

Real-time Processing

Real-time processing is crucial for many face detection and recognition applications, such as video surveillance, access control, and augmented reality. Achieving real-time performance requires efficient algorithms, optimized implementations, and often specialized hardware.

Edge Computing Solutions

Edge computing, which involves processing data closer to its source, has emerged as a viable solution for real-time face detection and recognition. By deploying models on edge devices, such as smartphones, cameras, or dedicated edge servers, latency can be reduced, bandwidth requirements minimized, and privacy concerns addressed.

Other Cases

Model Compression: Techniques like model pruning, quantization, and knowledge distillation can be employed to reduce the size and computational complexity of face detection and recognition models, making them suitable for deployment on resource-constrained devices.

Hardware Acceleration: Specialized hardware, such as GPUs, FPGAs, and dedicated AI chips, can significantly accelerate the inference speed of face detection and recognition models.
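Quantization, one of the model compression techniques listed above, can be illustrated by mapping floating-point weights to 8-bit integers with a single symmetric scale per tensor and then mapping them back. This per-tensor symmetric scheme is a simplifying assumption, and the function names are hypothetical; production toolchains handle quantization automatically and support more elaborate schemes. Each weight's round-trip error is at most half the scale step.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: weight ~= scale * q with q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate floating-point weights."""
    return [v * scale for v in q]


weights = [0.5, -1.27, 0.01, 1.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
```

Storing `q` as 8-bit integers takes a quarter of the memory of 32-bit floats, at the cost of the small rounding error bounded above.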

Multi-face Detection and Recognition

Many real-world scenarios involve detecting and recognizing multiple faces simultaneously, such as in crowd surveillance or group photos. Multi-face detection and recognition present additional challenges:

Scale Variation: Faces in a scene can appear at different scales, requiring algorithms to detect faces of varying sizes.

Overlapping Faces: Faces can partially or fully overlap, making it challenging to separate and identify individual faces.

Computational Cost: Processing multiple faces simultaneously increases computational demands, requiring efficient algorithms and optimized implementations.
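Scale variation is commonly handled with an image pyramid: the image is resized repeatedly so that a detector with a fixed input window sees faces of many sizes (MTCNN-style detectors work this way). The sketch below only computes the list of scales. The 12-pixel window, 20-pixel minimum face size, and 0.709 shrink factor are assumed, illustrative defaults, and the function name is hypothetical.

```python
def pyramid_scales(image_min_side, min_face=20, window=12, factor=0.709):
    """Resize factors for an image pyramid scanned with a fixed-size window.

    At scale s, a window-pixel detector covers faces of about window / s pixels
    in the original image, so the first scale targets the smallest face size.
    """
    scales = []
    scale = window / min_face
    # Stop once the resized image is smaller than the detector window.
    while image_min_side * scale >= window:
        scales.append(scale)
        scale *= factor
    return scales


scales = pyramid_scales(100, min_face=20, window=12, factor=0.5)
default_scales = pyramid_scales(480)
```

Every extra pyramid level adds another detector pass, which is one reason multi-face, multi-scale detection raises computational cost.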

3D Face Detection and Recognition

Traditional face detection and recognition systems primarily rely on 2D images, which can be sensitive to variations in pose and illumination. 3D face detection and recognition leverage depth information to create more robust and accurate systems.

3D Data Acquisition: 3D facial data can be acquired using various techniques, including structured light, stereo vision, and time-of-flight cameras.

3D Face Modeling: 3D face models capture the shape and geometry of a face, providing a more invariant representation compared to 2D images.

Pose and Illumination Invariance: 3D face recognition is less susceptible to variations in pose and illumination compared to 2D methods, as it relies on the underlying 3D structure of the face.
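Two of the acquisition techniques listed above reduce to short formulas. For a rectified stereo pair, depth is Z = f * B / d (focal length f in pixels, baseline B in metres, disparity d in pixels); for a pulsed time-of-flight sensor, depth is Z = c * t / 2, because the light travels to the face and back. The sketch below encodes both. The function names and sample camera parameters are illustrative assumptions, and continuous-wave time-of-flight cameras derive t from a phase shift rather than timing a pulse directly.

```python
SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def depth_from_disparity(focal_px, baseline_m, disparity_px):
    """Depth (metres) for a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def depth_from_round_trip(round_trip_s):
    """Depth (metres) for pulsed time-of-flight: light goes out and back, Z = c * t / 2."""
    return SPEED_OF_LIGHT * round_trip_s / 2


# Hypothetical camera: 700 px focal length, 6 cm baseline, 84 px disparity.
stereo_depth = depth_from_disparity(700, 0.06, 84)
tof_depth = depth_from_round_trip(2 * 0.5 / SPEED_OF_LIGHT)
```

Both examples place the face 0.5 m from the sensor. Note that stereo depth resolution degrades with distance, since a one-pixel disparity error matters more when d is small.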

Face Detection and Recognition in Challenging Conditions

Face detection and recognition systems often encounter challenging conditions in real-world scenarios, such as:

Low Light: Images captured in low light conditions suffer from low contrast and high noise levels, making it difficult to discern facial features.

Occlusion: Faces can be partially or fully obscured by objects like sunglasses, hats, or scarves, hindering the performance of face detection and recognition algorithms.

Motion Blur: Fast-moving faces or camera shake can introduce motion blur, making facial features less distinct.

Integration with Other Computer Vision Tasks

Face detection and recognition often serve as integral components within larger computer vision systems, integrated with other tasks to provide enhanced functionalities:

Facial Landmark Detection

Facial landmark detection involves locating specific points on a face, such as the corners of the eyes, the tip of the nose, and the corners of the mouth. These landmarks provide valuable information for various applications, including:

Face Alignment: Accurate landmark detection is crucial for aligning faces to a standardized pose, improving the performance of face recognition algorithms.

Facial Expression Recognition: The relative positions of facial landmarks can be used to infer facial expressions, providing insights into emotions and intent.

Head Pose Estimation: The positions of facial landmarks can be used to estimate the orientation of the head, which is valuable for applications like driver monitoring and human-computer interaction.

Conclusion

Face detection and face recognition have emerged as indispensable technologies in various domains, from security and surveillance to entertainment and marketing. While distinct in their objectives and methodologies, these technologies are often interconnected, with face detection serving as a precursor to more complex tasks like face recognition.

As research and development in computer vision continue to advance, we can anticipate even more sophisticated and accurate face detection and recognition systems, further blurring the lines between the digital and physical worlds and shaping the future of human-computer interaction.

Note: Some visuals on this blog post were generated using AI tools.