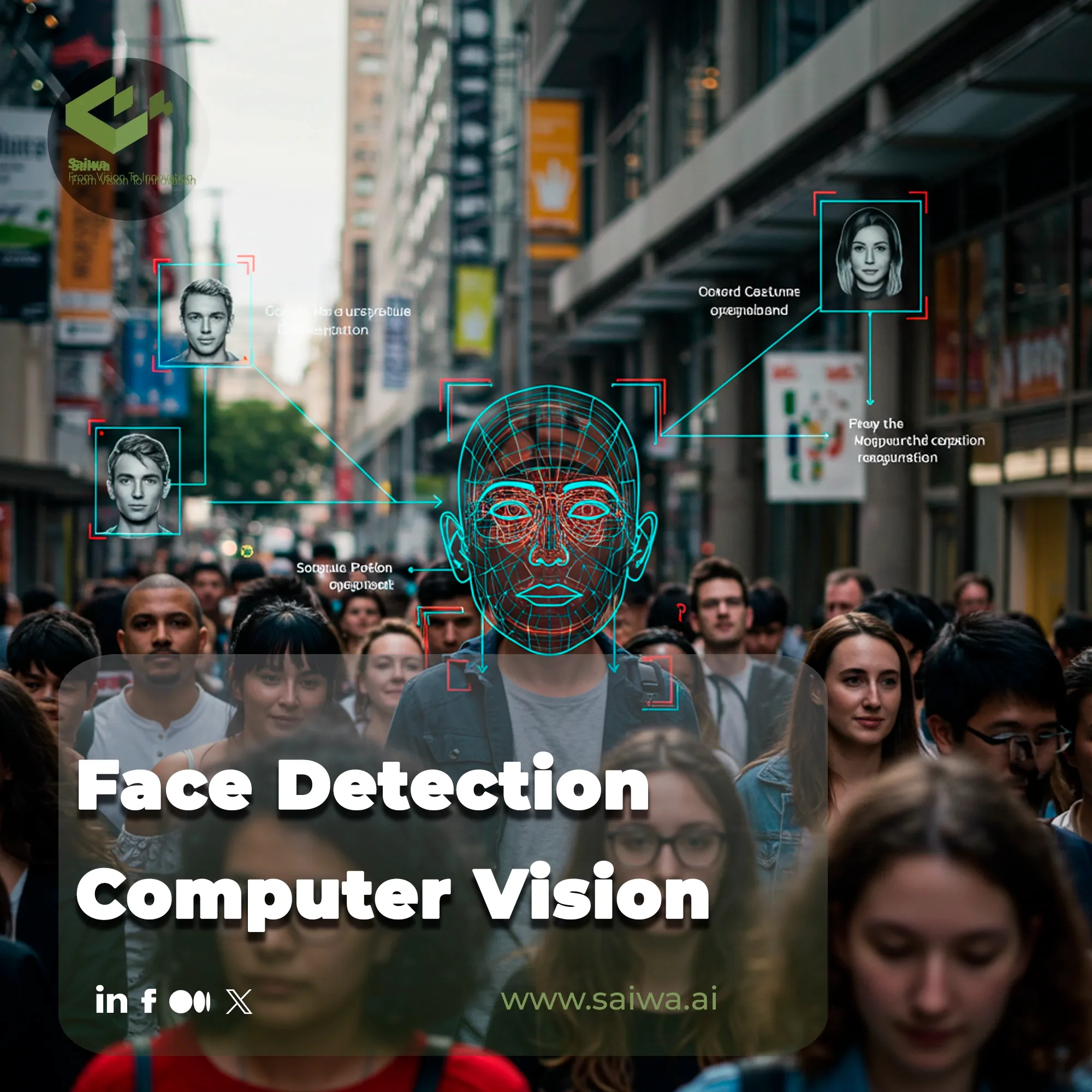

The ability to computationally perceive and locate human faces in a sea of visual data represents a foundational leap in human-computer interaction. This technology transcends simple pattern matching, enabling systems to become responsive and attentive. For developers and engineers seeking to build the next generation of intelligent applications, mastering this field is essential.

Platforms like Saiwa’s Fraime provide the advanced, ready-to-deploy tools to turn this complex challenge into a practical reality. This article dissects the evolution of face detection, from its classical roots to its modern deep learning triumphs.

A Brief History of Face Detection Technology

The first computerized face detection experiments were conducted in 1964 by American mathematician Woodrow W. Bledsoe, whose team attempted to program computers to recognize faces using a basic scanner. Significant progress came in 2001 when Paul Viola and Michael Jones introduced the Viola-Jones framework, which made accurate real-time face detection practical by evaluating simple Haar-like features with a cascade of boosted classifiers.

While the algorithm remains in use today, it struggles with occluded, rotated, or non-frontal faces. In recent years, deep learning techniques, particularly convolutional neural networks (CNNs), have greatly advanced face detection, achieving higher accuracy across real-world conditions.

How Does Face Detection Work?

Face detection is the process of locating human faces in images or video. Most systems work in stages:

Image Input and Preprocessing: The system takes an image or video frame and often makes adjustments to make detection easier. These adjustments include resizing, adjusting lighting, and removing noise.

Feature Extraction: The algorithm detects characteristics that are typical of faces, such as the eyes, nose, mouth, and overall face shape.

Classification: The system decides if a region of the image contains a face. This can be done using machine learning classifiers or neural networks that compare the detected features against learned face patterns.

Bounding and Localization: Once a face is detected, the system draws a bounding box around it and locates key facial landmarks.
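
The four stages above can be sketched as a toy sliding-window detector. This is an illustration only, not a production method: the mean-intensity feature, the fixed threshold, and all function names are assumptions made for the example, and landmark localization is left out. A real system would replace `extract_features` and `is_face` with a trained model.

```python
from typing import List, Tuple

Image = List[List[float]]          # grayscale image as rows of pixel intensities
Box = Tuple[int, int, int, int]    # (x, y, width, height)


def preprocess(image: Image) -> Image:
    """Stage 1: rescale intensities to [0, 1], a simple lighting normalization."""
    lo = min(min(row) for row in image)
    hi = max(max(row) for row in image)
    span = (hi - lo) or 1.0        # avoid dividing by zero on a flat image
    return [[(p - lo) / span for p in row] for row in image]


def extract_features(image: Image, box: Box) -> float:
    """Stage 2: toy feature (an assumption), the mean intensity inside the window."""
    x, y, w, h = box
    pixels = [image[r][c] for r in range(y, y + h) for c in range(x, x + w)]
    return sum(pixels) / len(pixels)


def is_face(feature: float, threshold: float = 0.5) -> bool:
    """Stage 3: toy classifier, a fixed threshold standing in for a trained model."""
    return feature > threshold


def detect(image: Image, window: int = 2, stride: int = 2) -> List[Box]:
    """Stage 4: slide a window over the image and return the boxes classified as faces."""
    norm = preprocess(image)
    height, width = len(norm), len(norm[0])
    boxes = []
    for y in range(0, height - window + 1, stride):
        for x in range(0, width - window + 1, stride):
            box = (x, y, window, window)
            if is_face(extract_features(norm, box)):
                boxes.append(box)
    return boxes
```

Calling `detect` on a small image with one bright block returns a single `(x, y, width, height)` box around that block, which mirrors the bounding-box output a real detector produces.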

Traditional Face Detection vs Face Recognition in Computer Vision

While the terms are often used interchangeably, detection and recognition are distinct. Face detection is the crucial first step, answering "Where is a face?" by marking its location, typically with a bounding box.

Recognition then asks, "Whose face is it?" The methods used simply to find a face have evolved dramatically over the years. Before the deep learning revolution, several clever, feature-based algorithms dominated the field. Let's briefly look at the cornerstone techniques that paved the way for today's models.

Histogram of Oriented Gradients (HOG)

This technique focuses on the structure and shape of an object. It divides an image into small cells and, within each cell, builds a histogram of gradient orientations (the directions in which pixel intensity changes, weighted by how strong each change is). Together, these histograms form a "signature" for what a human face looks like.
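
As a rough illustration of the idea, the sketch below computes an orientation histogram for a single cell. It is a simplified sketch under stated assumptions: the function name, the 9-bin layout, and the central-difference gradient are choices made for this example, and a full HOG descriptor would also normalize histograms across blocks of cells and concatenate them.

```python
import math
from typing import List


def hog_cell_histogram(cell: List[List[float]], bins: int = 9) -> List[float]:
    """Histogram of unsigned gradient orientations (0 to 180 degrees) for one cell.

    Each interior pixel votes for the bin of its gradient direction,
    weighted by its gradient magnitude.
    """
    hist = [0.0] * bins
    bin_width = 180.0 / bins
    rows, cols = len(cell), len(cell[0])
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            gx = cell[r][c + 1] - cell[r][c - 1]   # horizontal intensity change
            gy = cell[r + 1][c] - cell[r - 1][c]   # vertical intensity change
            magnitude = math.hypot(gx, gy)
            angle = math.degrees(math.atan2(gy, gx)) % 180.0
            # The trailing modulo guards against an angle that rounds up to 180.
            hist[int(angle // bin_width) % bins] += magnitude
    return hist
```

A cell containing a vertical edge puts all of its weight into the first bin (horizontal gradient), while a horizontal edge lands in the middle bin around 90 degrees, so the histogram encodes edge direction rather than raw brightness.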

Local Binary Patterns (LBP)

LBP is a texture descriptor. It works at a pixel level, comparing each pixel's intensity to that of its neighbors. By labeling pixels based on whether they are darker or lighter than the central pixel, it generates a binary code that captures granular textures, which are surprisingly effective for describing faces.
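
The comparison step can be sketched in a few lines. This is a minimal version of the basic 3x3 operator; the function names and the clockwise neighbor order are assumptions for the example (any fixed order works as long as it is used consistently), and ties are treated as "not darker than the center".

```python
from typing import List

# Offsets of the 8 neighbors, clockwise from the top-left (an arbitrary but fixed order).
NEIGHBORS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_code(image: List[List[int]], r: int, c: int) -> int:
    """8-bit LBP code for the pixel at (r, c), which must not lie on the image border."""
    center = image[r][c]
    code = 0
    for dr, dc in NEIGHBORS:
        bit = 1 if image[r + dr][c + dc] >= center else 0
        code = (code << 1) | bit   # append the comparison result as the next bit
    return code


def lbp_histogram(image: List[List[int]]) -> List[int]:
    """Count how often each of the 256 possible codes occurs over the interior pixels."""
    hist = [0] * 256
    for r in range(1, len(image) - 1):
        for c in range(1, len(image[0]) - 1):
            hist[lbp_code(image, r, c)] += 1
    return hist
```

The histogram of codes over a region, rather than any single code, is what typically serves as the texture descriptor passed to a classifier.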

Deep Learning–Based Frameworks (CNN-Based)

Convolutional Neural Networks (CNNs) marked a paradigm shift. Instead of relying on hand-crafted features like HOG or LBP, CNNs learn the relevant features automatically from vast datasets of images, enabling far greater accuracy and robustness to variations in lighting, pose, and expression.
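
The basic operation inside a CNN layer is a small filter slid across the image. The sketch below shows that operation followed by a ReLU activation. Note the assumption: the kernel here is hand-picked to respond to a dark-to-bright vertical edge, whereas in a real CNN the kernel values are weights learned from training data. The function names are illustrative.

```python
from typing import List

Matrix = List[List[float]]


def conv2d(image: Matrix, kernel: Matrix) -> Matrix:
    """Valid-mode 2D cross-correlation, the operation CNN layers call convolution."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def relu(feature_map: Matrix) -> Matrix:
    """Keep positive responses and zero out the rest."""
    return [[max(0.0, v) for v in row] for row in feature_map]


# Hand-picked stand-in for a learned filter: responds to dark-to-bright vertical edges.
EDGE_KERNEL = [[-1, 1],
               [-1, 1]]
```

Stacking many such filters in successive layers, with the weights adjusted during training, is what lets a CNN build up from edges to face-specific patterns without hand-crafted descriptors.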

Region-Based Convolutional Neural Networks (R-CNN)

R-CNN and its faster successors, Fast R-CNN and Faster R-CNN, improved upon running a CNN classifier over every possible window. They first propose a limited set of regions in an image that might contain a face and then run the classifier only on these candidates, which makes the process more efficient.
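
The two-stage idea can be sketched as follows. This is a toy illustration, not R-CNN itself: the brightness-based `propose_regions` filter is an assumption standing in for a real proposal method (the original R-CNN used selective search, and Faster R-CNN uses a learned region proposal network), and `classifier` is a hypothetical callable standing in for the CNN.

```python
from typing import Callable, List, Tuple

Image = List[List[float]]
Box = Tuple[int, int, int, int]  # (x, y, width, height)


def all_windows(width: int, height: int, size: int, stride: int) -> List[Box]:
    """Every square window an exhaustive sliding-window search would visit."""
    return [(x, y, size, size)
            for y in range(0, height - size + 1, stride)
            for x in range(0, width - size + 1, stride)]


def propose_regions(image: Image, windows: List[Box], min_mean: float) -> List[Box]:
    """Stage 1 (toy assumption): keep windows whose mean intensity is at least min_mean."""
    proposals = []
    for x, y, w, h in windows:
        pixels = [image[r][c] for r in range(y, y + h) for c in range(x, x + w)]
        if sum(pixels) / len(pixels) >= min_mean:
            proposals.append((x, y, w, h))
    return proposals


def detect_two_stage(image: Image, windows: List[Box], min_mean: float,
                     classifier: Callable[[Image, Box], bool]) -> List[Box]:
    """Stage 2: run the expensive classifier only on the proposed regions."""
    candidates = propose_regions(image, windows, min_mean)
    return [box for box in candidates if classifier(image, box)]
```

The saving comes from the call count: if the proposal stage keeps one window out of four, the expensive classifier runs once instead of four times.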

Applications of Face Detection with Computer Vision

The core principles of face detection in computer vision unlock capabilities that impact safety, efficiency, and business intelligence across industries. As this technology becomes more accessible, its applications are expanding into nearly every facet of our digital and physical worlds. The list below highlights a few key domains where it is making a significant impact.

People Counting and Tracking: Monitoring foot traffic in retail stores or public venues.

Queue Time Analytics and Productivity: Optimizing staffing and workflow by analyzing customer wait times.

Accident Prevention and Traffic Signal Detection: Enabling smart transportation systems to monitor driver attention and traffic flow.

Security and Surveillance Systems: Identifying human presence in restricted areas as a primary alert mechanism.

Retail Analytics and Customer Profiling: Gathering anonymized demographic data to understand customer behavior.

Future Trends in Face Detection in Computer Vision

The field continues to evolve at a breathtaking pace, driven by new architectures and learning methodologies. The pursuit is not just for greater accuracy but also for models that are more efficient, require less supervision, and can understand context on a deeper level. Three emerging trends, in particular, signal where the future of this technology is headed.

Transformer-based Detection Models: Originally developed for natural language processing, transformers are now showing strong promise in vision. Their attention mechanism relates distant parts of an image to one another, offering a more global understanding than the local focus of CNN filters.

Multi-modal Face Detection: Integrating other data streams, such as audio that accompanies lip movements, to improve detection performance, especially in noisy or occluded environments.

Self-Supervised and Semi-Supervised Learning: Training powerful models with vast amounts of unlabeled data, dramatically reducing the costly and time-consuming process of manual data annotation.

Empowering Face Detection in Computer Vision with Fraime’s AI Solutions

Building a robust detection system from scratch is a significant undertaking. Fraime by Saiwa accelerates this process by providing developers with powerful, pre-trained vision APIs. Instead of grappling with complex model training, you can integrate sophisticated detection capabilities directly into your applications.

This same precision-engineered technology can be leveraged to tackle other complex visual challenges, providing the building blocks needed to develop specialized solutions for analyzing crop health or identifying agricultural pests efficiently.

Conclusion

Face detection has journeyed from heuristic-based classical algorithms to powerful deep learning models that learn from data. It serves as a gateway technology for more advanced tasks like recognition and analysis. As frameworks become more efficient and accessible through platforms like Fraime, its integration will fuel innovation across countless commercial and scientific domains.

Note: Some visuals on this blog post were generated using AI tools.