Autonomous vehicles generate terabytes of sensor data daily from cameras, LiDAR, radar, and GPS systems, yet raw data alone cannot teach machine learning models to navigate safely. Manual labelling methods are not scalable enough to meet the massive annotation requirements for training self-driving systems.

Without precise labels identifying objects, lane markings, and road conditions, autonomous vehicle perception systems cannot reliably interpret complex driving environments. This annotation challenge is one of the fundamental barriers preventing current prototypes from being deployed commercially.

Data annotation transforms raw sensor data into structured training datasets by labeling objects, road features, and environmental conditions that enable machine learning models to recognize patterns and make driving decisions. Skilled annotators mark boundaries around vehicles, pedestrians, and obstacles while identifying lane markings, traffic signals, and road infrastructure that autonomous systems must perceive accurately.

This article tells you about how data annotation enables autonomous vehicle development, the methodologies supporting accurate labeling, and applications across perception systems.

The Critical Role of Annotation in Autonomous Systems

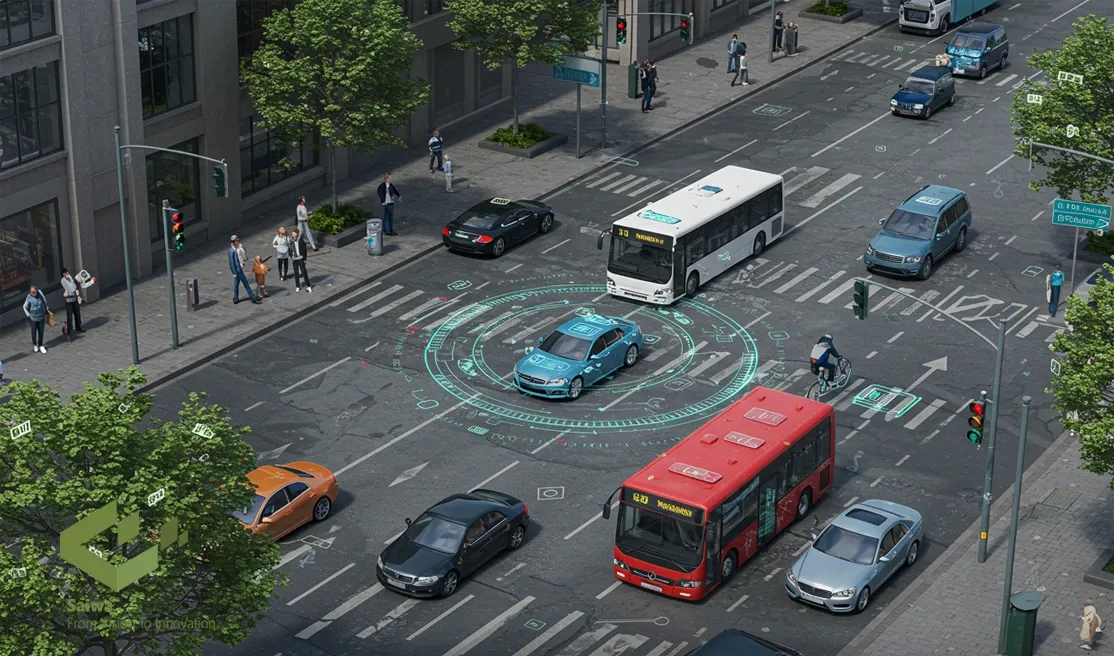

Accurate annotation transforms raw sensor data into labelled examples that machine learning models require to comprehend roads and respond safely. By tagging images, LiDAR points and video frames with information about object types, positions and contextual cues, annotation provides the ground truth that autonomous vehicle (AV) systems learn from, bridging the gap between noisy sensor inputs and reliable on-road behaviour.

- Pedestrian and Obstacle Recognition: Detailed labels of people's poses, trajectories, and relative positions allow AVs to distinguish between stationary obstacles and moving road users. This temporal and spatial information supports intent prediction, such as determining whether a person is likely to cross, and prompts safer and timelier reactions.

- Object Detection and Classification: Labeled datasets teach vehicles to recognise and distinguish objects in their environment, such as cars, bikes, barriers and signs. With consistent annotations, perception models learn to classify objects precisely and prioritise threats, thereby improving decision-making and collision avoidance.

- Lane and Traffic Sign Interpretation: Annotations for lane markings and signage under diverse conditions (rain, glare, worn paint) train AVs to parse road geometry and traffic rules. This helps the system keep proper lane position, make legal maneuvers, and respond correctly to signals and posted limits.

Annotation Methods for Visual Perception Systems

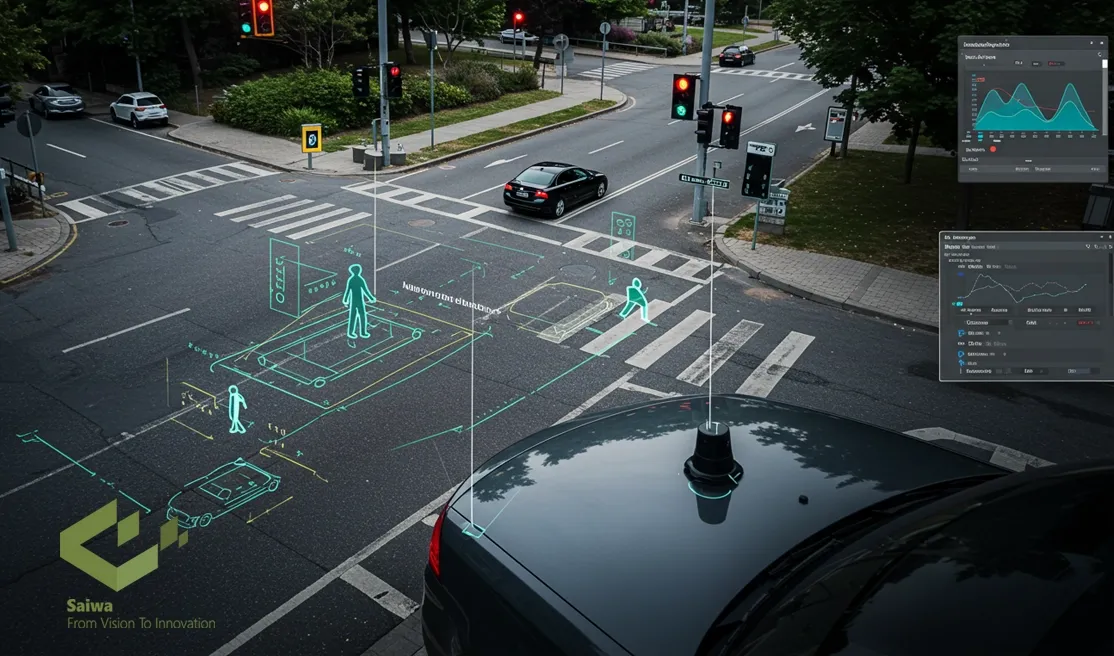

Image annotation is fundamental to the visual perception of autonomous vehicles, enabling camera-based systems to interpret road scenes.

- Bounding Box Annotation: Bounding Box Annotation draw rectangular boxes around objects within images, providing position and approximate size information. This efficient technique suits object detection tasks identifying vehicles, pedestrians, and signs, offering good performance with relatively fast annotation speeds for large datasets.

- Polygon and Segmentation Labeling: For precise object boundaries, annotators trace irregular shapes using connected points forming polygons. Semantic segmentation takes this further by classifying every pixel in images, providing detailed understanding of scene composition, including road surfaces, sidewalks, vegetation, and sky that contextualizes detected objects.

- LiDAR and Radar Annotation: Experts label 3D point cloud data from LiDAR and radar sensors to identify vehicles, pedestrians, and obstacles. This helps AVs perceive depth and shape accurately, even in poor lighting or adverse weather where cameras have limited visibility.

Successful Data Annotation Examples in Autonomous Vehicle Development

Leading innovative companies in the field of autonomous driving have demonstrated that precise data annotation has a direct impact on system accuracy, safety, and decision-making.

These case studies demonstrate how sophisticated annotation techniques, supported by automation and human expertise, are essential for the development of reliable autonomous systems.

By improving the quality of labelled data, these companies are continually pushing the boundaries of perception accuracy, enabling the development of safer, smarter and more adaptive self-driving technology.

Primary Benefits and Technical Constraints

While this technology has many benefits but on the flip side, there are implementation challenges to consider.

Constraints

Several significant obstacles complicate effective annotation for autonomous vehicles:

- Massive Scale Requirements: Training robust autonomous systems demands millions of annotated images and sensor recordings, creating annotation workloads requiring thousands of hours from large annotation teams that challenge project coordination.

- Annotation Consistency Maintenance: Different annotators interpret scenes differently, introducing inconsistencies in how objects are labeled or boundaries drawn. Maintaining uniform annotation standards across large teams requires extensive guidelines, training, and quality oversight processes.

- Complex Object Handling: Partially occluded objects, overlapping vehicles, and cluttered urban scenes challenge annotators to make judgment calls about object boundaries and classifications, introducing ambiguity that affects training data quality.

Benefits

Data annotation delivers essential capabilities enabling autonomous vehicle development:

- Model Performance Foundation: High-quality annotations directly determine perception system accuracy, with precisely labeled training data enabling reliable object detection, lane keeping, and hazard identification that define autonomous vehicle safety.

- Scalable Model Training: Annotated datasets support training across diverse scenarios including weather conditions, lighting variations, and geographic locations, ensuring models generalize beyond initial test environments to handle real-world driving complexity.

- Safety Validation Support: Annotated test datasets provide ground truth for evaluating deployed model performance, enabling quantitative safety assessments measuring how reliably systems detect critical objects and scenarios.

- Specialized Function Development: Targeted annotation supports development of specific capabilities like pedestrian intention prediction or construction zone navigation, allowing focused improvement of perception system components addressing particular challenges.

Final Word

Data annotation serves as the indispensable foundation enabling autonomous vehicles to perceive and navigate complex environments safely. Every object detected, every lane boundary recognized, and every pedestrian behavior predicted stems from precisely labeled training data teaching machine learning models to interpret sensor inputs correctly.

Modern autonomous driving pipelines rely not only on large volumes of labeled data, but on annotation platforms capable of keeping pace with real-world sensor complexity. This is where Saiwa and our Fraime platform meaningfully accelerate development. Fraime provides an integrated annotation ecosystem designed specifically for computer vision tasks used in autonomous vehicle perception—including high-volume image classification, precise bounding-box labeling for multi-class object detection, polygon and pixel-level segmentation for scene understanding, and scalable workflows optimized for LiDAR–camera fusion datasets.

With Fraime, teams can manage massive annotation projects through streamlined interfaces, automated pre-labeling, quality-control review layers, and export formats tailored to modern AV training pipelines (YOLO, COCO JSON, segmentation masks, point-cloud tags, and more). By combining manual precision with AI-assisted suggestions, Fraime reduces annotation time while improving label consistency across large teams.

This allows companies working in autonomous driving to train more accurate perception models, validate safety-critical behaviors, and iterate faster on real-world scenarios without being limited by traditional labeling bottlenecks.

Note: Some visuals on this blog post were generated using AI tools.