Image classification refers to the task of categorizing visual images into one of several predefined classes based on their contents and features. It is a core capability needed for many real-world applications such as facial recognition, medical imaging, defect detection in manufacturing, and more.

In recent years, significant progress has been made in image classification, largely driven by artificial intelligence methods, especially deep learning. However, effectively developing and deploying image classification systems remains challenging given the complexity of visual data. In this blog post, we will provide an overview of AI image classification, common techniques, performance evaluation, and deployment considerations for real-world impact.

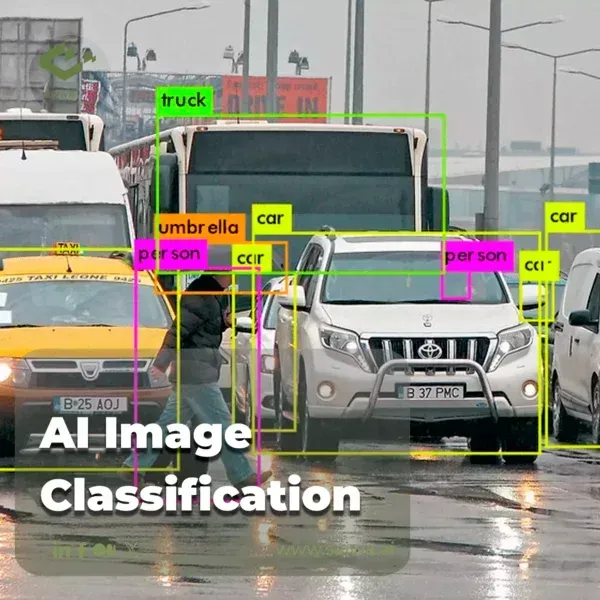

The goal of image classification is to assign a semantic label or class to images according to their contents. Classes can range from generic objects like ‘car’, ‘person’, and ‘animal’ to domain-specific labels relevant to a particular application. Image classification in machine learning enables machines to mimic human visual recognition capabilities at scale for many use cases. However, it is an extremely difficult task. Images can vary significantly in scale, orientation, illumination, occlusion, and more. There may be multiple objects present and contextual relationships that require holistic understanding. The high dimensionality of pixel data also poses computational challenges.

Traditional Machine Learning Approaches

Previous approaches relied on traditional machine learning pipelines. Key steps included manual feature engineering to extract informative features, dimensionality reduction, and training classifiers such as support vector machines (SVMs), random forests, and k-nearest neighbors (kNN) on the features. However, performance was limited due to the challenge of developing hand-crafted features that accurately capture visual semantics and generalize across diverse images. These models have been outperformed by modern deep-learning techniques.

Deep Learning Models

Deep neural networks such as convolutional neural networks (CNNs) now dominate AI image classification tasks. CNNs use convolutions to automatically learn hierarchical feature representations directly from pixels. Based on extensive research and datasets, architectures such as ResNet have achieved major leaps in performance. Transfer learning allows pre-trained models such as VGGNet to be fine-tuned on new datasets, improving accuracy with limited data. The representations learned by deep CNNs far outperform hand-coded features.

Data Augmentation and Regularization

Despite their high capacity, overfitting remains a concern for deep neural networks. Data augmentation techniques like random cropping, flipping, and color shifting artificially expand the training data to teach model invariance. Generative adversarial networks can synthesize realistic images to augment limited datasets. Dropout layers randomly drop inputs to prevent co-adaptation. With sufficient data and regularization, deep networks generalize robustly.

Performance Evaluation Metrics

Quantifying image classification performance relies on metrics like classification accuracy, precision, recall, and F1-score. The confusion matrix aggregates correct and incorrect predictions for each class. For probabilistic models, receiver operating characteristic (ROC) curves evaluate varying discrimination thresholds. Benchmark datasets like ImageNet and CIFAR provide standardized performance measurement and model selection. No single metric dominates, so holistic assessment over training and test data is needed.

Explainability and Interpretability

For safety-critical applications, model interpretability and explainability are paramount. Techniques such as saliency maps, LIME, and SHAP highlight the image regions that most influenced the predictions, providing transparency. This builds confidence in the model and helps identify potential biases. It also makes it easier to diagnose misclassifications. Overall, interpretability enables responsible improvement of image classifiers.

Handling Class Imbalance

Real-world image datasets often have imbalanced class distributions with far more examples for some classes versus others. This skewness biases models towards the majority classes. Solutions include oversampling minority classes and synthetically generating additional data. Loss weighting adjusts model penalties to focus on minority classes. Ensemble methods leverage multiple balanced models. Overall, mitigating class imbalance is vital for accurate multi-class classification.

Video Classification

Beyond static images, video classification aims to categorize video clips based on temporal actions and events depicted. This requires spatiotemporal models to analyze frame sequences. Architectures like 3D CNNs and LSTMs encode motion and temporal context. Video classification enables applications such as human activity recognition, autonomous vehicle perception, and surveillance monitoring. Large labeled video datasets have driven progress in this domain.

Adversarial Attacks and Defense

Recent research has exposed the vulnerability of machine learning models to adversarial examples - inputs that are subtly altered to force misclassification. Adversarial training augments data with generated attacks to improve robustness. Detector networks identify anomalous inputs. Overall, adversarially robust models are critical for safety-critical applications such as self-driving vehicles. Ongoing work focuses on developing certification methods to verify model reliability under attack.

Continual and Lifelong Learning

Humans continuously acquire knowledge and skills over time. Similarly, continual learning aims to enable AI models to incrementally expand their capabilities by adapting to new data classes without forgetting earlier concepts. Architectural techniques include selective neuron gating and dynamic network expansion. Lifelong learning will be key to developing versatile systems that evolve as environments change.

Self-Supervised Representation Learning

Labeled image datasets can be limited in some domains.

Pretraining on Unlabeled Video and Image Data

Self-supervised representation learning is a powerful approach where ML models are pre-trained on large volumes of unlabeled data, such as images and videos. During this phase, models learn to extract meaningful representations without explicit human labeling.

Contrastive Learning and Other Proxy Tasks

Contrastive learning is a popular self-supervised learning technique that trains models to differentiate between similar and dissimilar samples. Proxy tasks like predicting missing parts of an image or the next word in a sentence also play a role in self-supervised learning.

Transferring Representations to Downstream Tasks

The representations learned during self-supervised pretraining can be transferred to downstream tasks, such as AI image classification, object detection, or natural language processing. This transfer of knowledge often results in improved performance and reduced data requirements.

In the fast-evolving landscape of machine learning, addressing adversarial attacks, implementing continual learning, and harnessing self-supervised representation learning are pivotal steps toward building more robust and adaptive AI systems. As researchers and practitioners continue to advance these areas, the potential for AI applications across various domains becomes even more promising.

Production Deployment

Real-world deployment involves optimizing latency, throughput, scalability, and cost-efficiency for large-scale usage. Cloud platforms like AWS, Azure, and GCP offer managed services to deploy models trained with frameworks like PyTorch and TensorFlow. Optimized inference engines handle heavy request loads. Edge computing devices require model compression and efficient inferencing software. Rigorous testing and monitoring are also crucial to ensure robustness. With thoughtful engineering, image classification can transition securely from research into application.

Conclusion

AI Image classification powered by deep learning has unleashed transformative capabilities in computer vision. However, expanding its use in the real world will require addressing challenges ranging from overfitting to interpretability. Responsible practices around data bias and transparency will be critical for trustworthy systems. If implemented thoughtfully, AI-driven image classification can replicate and augment human visual intelligence to deliver immense societal value. The future potential remains wide open as research continues to advance the field.

Note: Some visuals on this blog post were generated using AI tools.